Setting up Falco on OpenShift 4.12

Falco is a security tool that monitors kernel events such as system calls and raises real-time alerts. In this post I'll document the steps taken to get open source Falco running on an OpenShift 4.12 cluster.

UPDATE: Use the falco-driver-loader-legacy image for OpenShift 4.12 deployments.

First Try

We will use the Falco Helm chart version 3.8.0 for our first try of setting up Falco on our OpenShift cluster.

This is our values file:

driver:
  kind: ebpf
falco:
  json_output: true
  json_include_output_property: true
  log_syslog: false
  log_level: info
falcosidekick:
  enabled: true
  webui:
    enabled: true

We would like to use the eBPF driver to monitor kernel events, enable Falco Sidekick, which routes events, and enable the Falco Sidekick UI for easier testing.

Because of reasons we leverage kustomize to render the helm chart. The final kustomize config is here (after fixing all problems mentioned below).

So after deploying the chart via ArgoCD (another story), we have the following pods:

$ oc get pods -n falco

NAME READY STATUS RESTARTS AGE

falco-4cc8j 0/2 Init:CrashLoopBackOff 5 (95s ago) 4m31s

falco-bx87j 0/2 Init:CrashLoopBackOff 5 (75s ago) 4m29s

falco-ds9w6 0/2 Init:CrashLoopBackOff 5 (99s ago) 4m30s

falco-falcosidekick-ui-redis-0 1/1 Running 0 4m28s

falco-gxznz 0/2 Init:CrashLoopBackOff 3 (14s ago) 4m30s

falco-vtnk5 0/2 Init:CrashLoopBackOff 5 (98s ago) 4m29s

falco-wbn2k 0/2 Init:CrashLoopBackOff 5 (80s ago) 4m29s

Hm, not so good. It seems some initContainers are failing.

Let's check the falco-driver-loader initContainer:

* Setting up /usr/src links from host

* Running falco-driver-loader for: falco version=0.36.1, driver version=6.0.1+driver, arch=x86_64, kernel release=4.18.0-372.73.1.el8_6.x86_64, kernel version=1

* Running falco-driver-loader with: driver=bpf, compile=yes, download=yes

* Mounting debugfs

mount: /sys/kernel/debug: permission denied.

dmesg(1) may have more information after failed mount system call.

* Filename 'falco_rhcos_4.18.0-372.73.1.el8_6.x86_64_1.o' is composed of:

- driver name: falco

- target identifier: rhcos

- kernel release: 4.18.0-372.73.1.el8_6.x86_64

- kernel version: 1

* Trying to download a prebuilt eBPF probe from https://download.falco.org/driver/6.0.1%2Bdriver/x86_64/falco_rhcos_4.18.0-372.73.1.el8_6.x86_64_1.o

curl: (22) The requested URL returned error: 404

Unable to find a prebuilt falco eBPF probe

* Trying to compile the eBPF probe (falco_rhcos_4.18.0-372.73.1.el8_6.x86_64_1.o)

expr: syntax error: unexpected argument '1'

make[1]: *** /lib/modules/4.18.0-372.73.1.el8_6.x86_64/build: No such file or directory. Stop.

make: *** [Makefile:38: all] Error 2

mv: cannot stat '/usr/src/falco-6.0.1+driver/bpf/probe.o': No such file or directory

Unable to load the falco eBPF probe

Seems to be an issue with a missing directory /lib/modules/4.18.0-372.73.1.el8_6.x86_64/build.
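The probe file name and download URL in the log follow a simple pattern built from the driver version, architecture, target identifier, kernel release and kernel version. A minimal sketch that rebuilds the URL from those parts, handy for checking by hand whether a prebuilt probe exists for a given kernel; the function name `falco_probe_url` and its argument order are made up for this post, and the pattern is only inferred from the log output above:

```shell
# Sketch: rebuild the prebuilt-probe URL printed by falco-driver-loader.
# falco_probe_url is a hypothetical helper, not part of Falco.
falco_probe_url() {
  driver_version=$1; arch=$2; target=$3; kernel_release=$4; kernel_version=$5
  # File name pattern from the log: falco_<target>_<kernel release>_<kernel version>.o
  filename="falco_${target}_${kernel_release}_${kernel_version}.o"
  # The '+' in the driver version shows up percent-encoded (%2B) in the URL.
  encoded_version=$(printf '%s' "$driver_version" | sed 's/+/%2B/g')
  printf 'https://download.falco.org/driver/%s/%s/%s\n' "$encoded_version" "$arch" "$filename"
}

# Usage with the values from the log:
#   falco_probe_url "6.0.1+driver" x86_64 rhcos "4.18.0-372.73.1.el8_6.x86_64" 1
```

Passing the result to `curl -fsI` should reproduce the 404 from the log for our kernel.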

And we told the Helm chart to enable falco-sidekick and falco-sidekick-ui, but where are they?

Let's check the events with oc get events as well, and what do we see?

8s Warning FailedCreate replicaset/falco-falcosidekick-7cfbbbf89f Error creating: pods "falco-falcosidekick-7cfbbbf89f-" is forbidden: unable to validate against any security context constraint: [provider "anyuid": Forbidden: not usable by user or serviceaccount, provider restricted-v2: .spec.securityContext.fsGroup: Invalid value: []int64{1234}: 1234 is not an allowed group, provider restricted-v2: .containers[0].runAsUser: Invalid value: 1234: must be in the ranges: [1000730000, 1000739999], provider "restricted": Forbidden: not usable by user or serviceaccount, provider "nonroot-v2": Forbidden: not usable by user or serviceaccount, provider "nonroot": Forbidden: not usable by user or serviceaccount, provider "hostmount-anyuid": Forbidden: not usable by user or serviceaccount, provider "machine-api-termination-handler": Forbidden: not usable by user or serviceaccount, provider "hostnetwork-v2": Forbidden: not usable by user or serviceaccount, provider "hostnetwork": Forbidden: not usable by user or serviceaccount, provider "hostaccess": Forbidden: not usable by user or serviceaccount, provider "falco": Forbidden: not usable by user or serviceaccount, provider "node-exporter": Forbidden: not usable by user or serviceaccount, provider "privileged": Forbidden: not usable by user or serviceaccount]

6s Warning FailedCreate replicaset/falco-falcosidekick-ui-76885bd484 Error creating: pods "falco-falcosidekick-ui-76885bd484-" is forbidden: unable to validate against any security context constraint: [provider "anyuid": Forbidden: not usable by user or serviceaccount, provider restricted-v2: .spec.securityContext.fsGroup: Invalid value: []int64{1234}: 1234 is not an allowed group, provider restricted-v2: .containers[0].runAsUser: Invalid value: 1234: must be in the ranges: [1000730000, 1000739999], provider "restricted": Forbidden: not usable by user or serviceaccount, provider "nonroot-v2": Forbidden: not usable by user or serviceaccount, provider "nonroot": Forbidden: not usable by user or serviceaccount, provider "hostmount-anyuid": Forbidden: not usable by user or serviceaccount, provider "machine-api-termination-handler": Forbidden: not usable by user or serviceaccount, provider "hostnetwork-v2": Forbidden: not usable by user or serviceaccount, provider "hostnetwork": Forbidden: not usable by user or serviceaccount, provider "hostaccess": Forbidden: not usable by user or serviceaccount, provider "falco": Forbidden: not usable by user or serviceaccount, provider "node-exporter": Forbidden: not usable by user or serviceaccount, provider "privileged": Forbidden: not usable by user or serviceaccount]

Looks like a problem with OpenShift's Security Context Constraints (SCCs).
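The range [1000730000, 1000739999] in the event comes from the UID block OpenShift assigns to the namespace, stored in the `openshift.io/sa.scc.uid-range` annotation in the form `<first UID>/<block size>`. A minimal sketch for checking a UID against such a range; the helper name `uid_in_range` is made up, and the value `1000730000/10000` is what we would expect for our namespace based on the event, not something we copied from the cluster:

```shell
# Sketch: succeed if a UID lies inside a "<first UID>/<block size>" range.
# uid_in_range is a hypothetical helper, not an oc subcommand.
uid_in_range() {
  uid=$1
  range=$2
  first=${range%%/*}          # part before the slash
  size=${range##*/}           # part after the slash
  last=$((first + size - 1))  # last UID of the block
  [ "$uid" -ge "$first" ] && [ "$uid" -le "$last" ]
}

# Usage, assuming the falco namespace:
#   range=$(oc get namespace falco -o jsonpath='{.metadata.annotations.openshift\.io/sa\.scc\.uid-range}')
#   uid_in_range 1234 "$range" || echo "UID 1234 is outside $range"
```

With that range, UID 1234 falls outside the block, which matches the runAsUser complaint in the event.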

Summary of problems

- The falco DaemonSet fails to start pods because of a missing directory.

- Falco Sidekick and Falco Sidekick UI fail to start because of Security Context Constraint (SCC) issues.

Fixing the Falco daemonset

Falco tries to download a pre-compiled eBPF probe, fails and then tries to compile that probe for our host OS kernel. This fails with the message:

make[1]: *** /lib/modules/4.18.0-372.73.1.el8_6.x86_64/build: No such file or directory. Stop.

As far as we know, there are no kernel sources installed on RHCOS nodes in OpenShift. After a little bit of searching the interweb we found the following issue comment on GitHub:

So we need to enable the kernel-devel extension. The official docs are here. They do not mention kernel-devel, but there's a knowledge base article mentioning kernel-devel, so let's give it a try.

We deploy two MachineConfigs, one for worker and one for master nodes, to roll out the extension. The worker configuration looks like this:

apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: worker
  name: 99-worker-kernel-devel-extensions
spec:
  extensions:
    - kernel-devel

See also our Kustomize configuration here.
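Rolling out a MachineConfig like this one can take a while, and progress shows up in the UPDATED, UPDATING and DEGRADED columns of `oc get mcp`. A minimal sketch for checking whether the rollout has finished, working purely on that text output; the function name `mcp_rollout_done` is made up for this post, and the column positions are an assumption that breaks if the CONFIG column is empty:

```shell
# Sketch: read `oc get mcp` output on stdin and succeed only if every pool
# reports UPDATED=True, UPDATING=False and DEGRADED=False.
# mcp_rollout_done is a hypothetical helper, not an oc subcommand.
mcp_rollout_done() {
  awk '
    NR > 1 && NF > 0 {
      pools++
      # Columns: NAME CONFIG UPDATED UPDATING DEGRADED ...
      if ($3 != "True" || $4 != "False" || $5 != "False") pending++
    }
    END {
      if (pools > 0 && pending == 0) exit 0
      exit 1
    }'
}

# Usage:
#   oc get mcp | mcp_rollout_done && echo "rollout finished"
```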

As soon as we apply our MachineConfigs, OpenShift starts the rollout via MachineConfigPools:

$ oc get mcp

NAME CONFIG UPDATED UPDATING DEGRADED MACHINECOUNT READYMACHINECOUNT UPDATEDMACHINECOUNT DEGRADEDMACHINECOUNT AGE

master rendered-master-ce464ff45cc049fce3e8a63e36a4ee9e False True False 3 0 0 0 13d

worker rendered-worker-a0f8f0d915ef01ba4a1ab3047b6c863d False True False 3 0 0 0 13d

When the rollout is done, let's restart all Falco DaemonSet pods:

$ oc delete pods -l app.kubernetes.io/name=falco

And check the status:

$ oc get pods

NAME READY STATUS RESTARTS AGE

falco-5wfnk 0/2 Init:Error 1 (3s ago) 7s

falco-66fxw 0/2 Init:0/2 1 (2s ago) 6s

falco-6fbc7 0/2 Init:CrashLoopBackOff 1 (2s ago) 8s

falco-8h8n4 0/2 Init:0/2 1 (2s ago) 6s

falco-falcosidekick-ui-redis-0 1/1 Running 0 18m

falco-nhld2 0/2 Init:CrashLoopBackOff 1 (2s ago) 6s

falco-xqv4b 0/2 Init:CrashLoopBackOff 1 (3s ago) 8s

Still, the initContainers fail. Let's check the log again:

$ oc logs -c falco-driver-loader falco-5wfnk

* Setting up /usr/src links from host

* Running falco-driver-loader for: falco version=0.36.1, driver version=6.0.1+driver, arch=x86_64, kernel release=4.18.0-372.73.1.el8_6.x86_64, kernel version=1

* Running falco-driver-loader with: driver=bpf, compile=yes, download=yes

* Mounting debugfs

mount: /sys/kernel/debug: permission denied.

dmesg(1) may have more information after failed mount system call.

* Filename 'falco_rhcos_4.18.0-372.73.1.el8_6.x86_64_1.o' is composed of:

- driver name: falco

- target identifier: rhcos

- kernel release: 4.18.0-372.73.1.el8_6.x86_64

- kernel version: 1

* Trying to download a prebuilt eBPF probe from https://download.falco.org/driver/6.0.1%2Bdriver/x86_64/falco_rhcos_4.18.0-372.73.1.el8_6.x86_64_1.o

curl: (22) The requested URL returned error: 404

Unable to find a prebuilt falco eBPF probe

* Trying to compile the eBPF probe (falco_rhcos_4.18.0-372.73.1.el8_6.x86_64_1.o)

Makefile:1005: *** "Cannot generate ORC metadata for CONFIG_UNWINDER_ORC=y, please install libelf-dev, libelf-devel or elfutils-libelf-devel". Stop.

make: *** [Makefile:38: all] Error 2

mv: cannot stat '/usr/src/falco-6.0.1+driver/bpf/probe.o': No such file or directory

Unable to load the falco eBPF probe

So this time we get another error. The culprit is the following line:

Makefile:1005: *** "Cannot generate ORC metadata for CONFIG_UNWINDER_ORC=y, please install libelf-dev, libelf-devel or elfutils-libelf-devel". Stop.

Searching the interweb again only reveals an old issue that should already be fixed.

So as a quick hack we modified the falco-driver-loader image to contain libelf-dev and pushed the image to Quay.

We then modified our Falco Helm configuration to use the updated image:

driver:
  kind: ebpf
  loader:
    initContainer:
      image:
        registry: quay.io
        repository: tosmi/falco-driver-loader
        tag: 0.36.1-libelf-dev
falco:
  json_output: true
  json_include_output_property: true
  log_syslog: false
  log_level: info
falcosidekick:
  enabled: true
  webui:
    enabled: true

Note the updated driver.loader.initContainer section.

Let's check our pods again:

$ oc get pods

NAME READY STATUS RESTARTS AGE

falco-2ssgx 2/2 Running 0 66s

falco-5hqgg 1/2 Running 0 66s

falco-82kq9 2/2 Running 0 65s

falco-99zxw 2/2 Running 0 65s

falco-falcosidekick-test-connection 0/1 Error 0 67s

falco-falcosidekick-ui-redis-0 1/1 Running 0 31m

falco-slx5k 2/2 Running 0 65s

falco-tzm8d 2/2 Running 0 65s

Success! This time the DaemonSet pods started successfully. Just note that you have to be patient. The first start took about 1-2 minutes to complete.
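While waiting, it helps to list only the pods that are not fully ready yet instead of scanning the whole table. A minimal sketch that filters `oc get pods` output by its READY column; the function name `not_ready_pods` is made up for this post, and note that it would also list pods that have completed, since those report 0/1 as well:

```shell
# Sketch: read `oc get pods` output on stdin and print the names of pods
# whose READY column (ready/total containers) is not complete.
# not_ready_pods is a hypothetical helper, not an oc subcommand.
not_ready_pods() {
  awk 'NR > 1 && NF > 0 {
    split($2, ready, "/")              # "1/2" -> ready[1]=1, ready[2]=2
    if (ready[1] != ready[2]) print $1
  }'
}

# Usage:
#   oc get pods -n falco | not_ready_pods
```

For the listing above this prints falco-5hqgg and falco-falcosidekick-test-connection.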

Let's check the logs of one DaemonSet pod just to be sure:

$ oc logs -c falco-driver-loader falco-2ssgx

* Setting up /usr/src links from host

* Running falco-driver-loader for: falco version=0.36.1, driver version=6.0.1+driver, arch=x86_64, kernel release=4.18.0-372.73.1.el8_6.x86_64, kernel version=1

* Running falco-driver-loader with: driver=bpf, compile=yes, download=yes

* Mounting debugfs

mount: /sys/kernel/debug: permission denied.

dmesg(1) may have more information after failed mount system call.

* Filename 'falco_rhcos_4.18.0-372.73.1.el8_6.x86_64_1.o' is composed of:

- driver name: falco

- target identifier: rhcos

- kernel release: 4.18.0-372.73.1.el8_6.x86_64

- kernel version: 1

* Trying to download a prebuilt eBPF probe from https://download.falco.org/driver/6.0.1%2Bdriver/x86_64/falco_rhcos_4.18.0-372.73.1.el8_6.x86_64_1.o

curl: (22) The requested URL returned error: 404

Unable to find a prebuilt falco eBPF probe

* Trying to compile the eBPF probe (falco_rhcos_4.18.0-372.73.1.el8_6.x86_64_1.o)

* eBPF probe located in /root/.falco/6.0.1+driver/x86_64/falco_rhcos_4.18.0-372.73.1.el8_6.x86_64_1.o

* Success: eBPF probe symlinked to /root/.falco/falco-bpf.o

Especially the line

* Success: eBPF probe symlinked to /root/.falco/falco-bpf.o

looks promising. So on to the next problem: getting falco-sidekick and falco-sidekick-ui running.

We also opened a bug report upstream to get feedback from the developers on this issue.

UPDATE

Andreagit97 kindly pointed out in the issue above that there is already an image with libelf-dev available: falco-driver-loader-legacy. We can confirm that this image fixes the problem mentioned above.

So this is our final falco helm chart values.yaml:

driver:
  kind: ebpf
  loader:
    initContainer:
      image:
        repository: falcosecurity/falco-driver-loader-legacy
falco:
  json_output: true
  json_include_output_property: true
  log_syslog: false
  log_level: info
falcosidekick:
  enabled: true
  webui:
    enabled: true

Fixing falco-sidekick and falco-sidekick-ui

Remember pod startup actually failed because of the following event (check with oc get events):

.spec.securityContext.fsGroup: Invalid value: []int64{1234}: 1234 is not an allowed group

It seems the sidekick pods want to run with a specific UID and fsGroup. The default OpenShift Security Context Constraint (SCC), restricted, prohibits this.

Let's confirm our suspicion:

$ oc get deploy -o jsonpath='{.spec.template.spec.securityContext}{"\n"}' falco-falcosidekick

{"fsGroup":1234,"runAsUser":1234}

$ oc get deploy -o jsonpath='{.spec.template.spec.securityContext}{"\n"}' falco-falcosidekick-ui

{"fsGroup":1234,"runAsUser":1234}

Bingo! runAsUser and fsGroup are set to 1234 for both deployments. There is another SCC that we could leverage, nonroot, which basically allows any UID except 0. We just need to get the ServiceAccount that falco-sidekick and falco-sidekick-ui are actually using:

$ oc get deploy -o jsonpath='{.spec.template.spec.serviceAccount}{"\n"}' falco-falcosidekick

falco-falcosidekick

$ oc get deploy -o jsonpath='{.spec.template.spec.serviceAccount}{"\n"}' falco-falcosidekick-ui

falco-falcosidekick-ui

So falco-sidekick uses falco-falcosidekick as its ServiceAccount and falco-sidekick-ui uses falco-falcosidekick-ui. Let's grant both ServiceAccounts access to the nonroot SCC.

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: falco-falcosidekick-scc:nonroot
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:openshift:scc:nonroot
subjects:
  - kind: ServiceAccount
    name: falco-falcosidekick
    namespace: falco
  - kind: ServiceAccount
    name: falco-falcosidekick-ui
    namespace: falco

We've already added this file to our Kustomize configuration.
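For a quick test outside of GitOps, a similar grant can be made imperatively with `oc adm policy add-scc-to-user`. A minimal sketch that only prints the commands so they can be reviewed before running; the helper name `print_scc_grants` is made up for this post, and we have not compared the objects it would create with the binding above:

```shell
# Sketch: print (not run) one `oc adm policy add-scc-to-user` command per
# ServiceAccount. print_scc_grants is a hypothetical helper.
print_scc_grants() {
  scc=$1
  namespace=$2
  shift 2
  for sa in "$@"; do
    # -z selects a ServiceAccount in the namespace given with -n
    printf 'oc adm policy add-scc-to-user %s -z %s -n %s\n' "$scc" "$sa" "$namespace"
  done
}

# Usage: review the output, then pipe it to sh to apply it.
#   print_scc_grants nonroot falco falco-falcosidekick falco-falcosidekick-ui
```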

Let's trigger a redeployment by deleting the ReplicaSets of both deployments. They will be re-created automatically:

$ oc delete rs -l app.kubernetes.io/name=falcosidekick

$ oc delete rs -l app.kubernetes.io/name=falcosidekick-ui

Finally, let's confirm everything is up and running:

$ oc get deploy,ds,pods

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/falco-falcosidekick 2/2 2 2 5d

deployment.apps/falco-falcosidekick-ui 2/2 2 2 5d

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/falco 6 6 6 6 6 <none> 6d2h

NAME READY STATUS RESTARTS AGE

pod/falco-2ssgx 2/2 Running 0 21m

pod/falco-5hqgg 2/2 Running 0 21m

pod/falco-82kq9 2/2 Running 0 21m

pod/falco-99zxw 2/2 Running 0 21m

pod/falco-falcosidekick-7cfbbbf89f-qxwxs 1/1 Running 0 118s

pod/falco-falcosidekick-7cfbbbf89f-rz5lj 1/1 Running 0 118s

pod/falco-falcosidekick-ui-76885bd484-p7lqm 1/1 Running 0 2m18s

pod/falco-falcosidekick-ui-76885bd484-sfgh4 1/1 Running 0 2m18s

pod/falco-falcosidekick-ui-redis-0 1/1 Running 0 51m

pod/falco-slx5k 2/2 Running 0 21m

pod/falco-tzm8d 2/2 Running 0 21m

Testing Falco

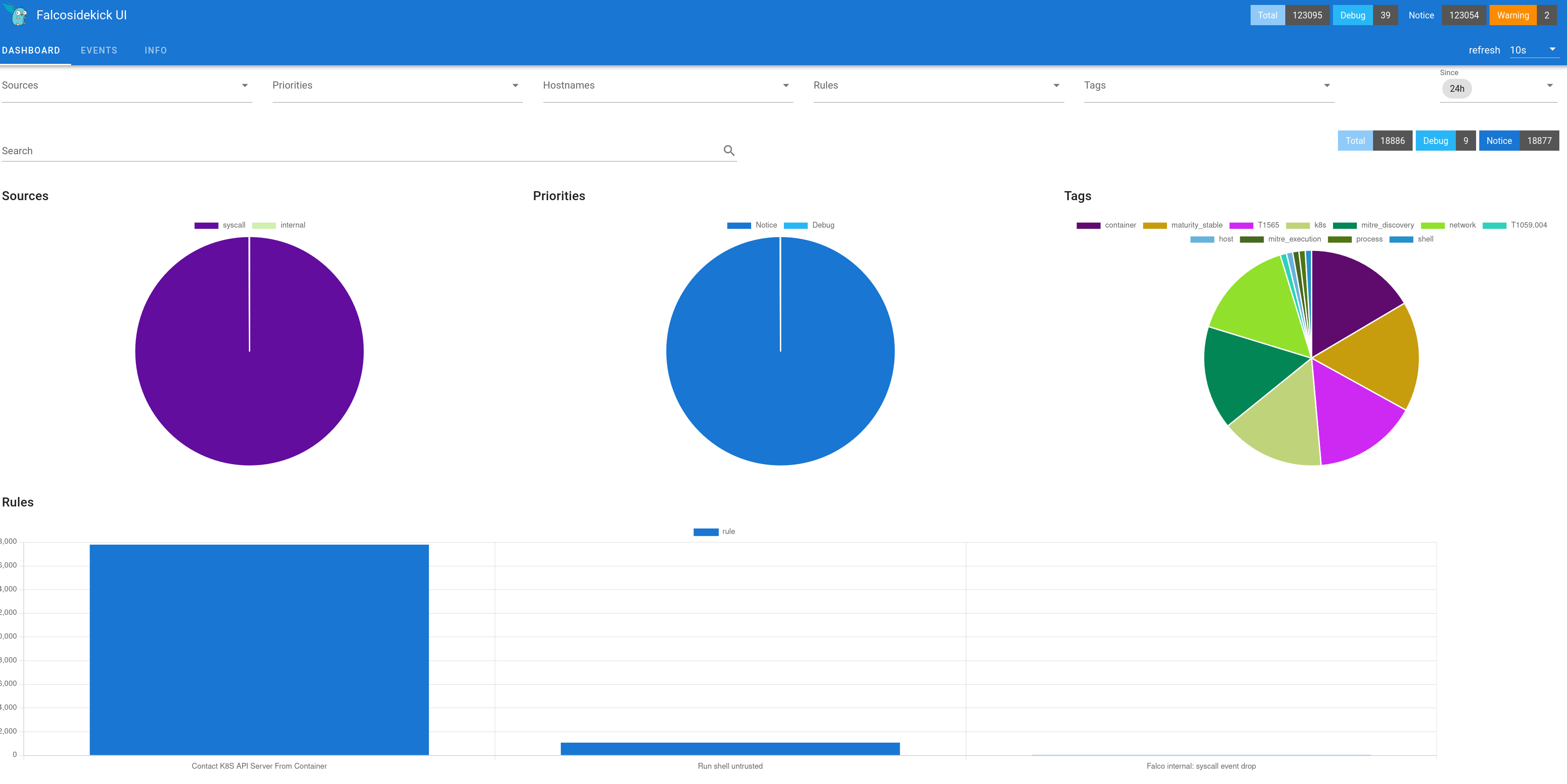

Now that everything seems to be running, let's do a quick test. First we will try to access the Falco Sidekick user interface.

Falco will not deploy a route for the UI automatically. Instead, we've created a Kustomize overlay with a custom route:

apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: falco-falcosidekick-ui
  namespace: falco
spec:
  host: falcosidekick-ui.apps.hub.aws.tntinfra.net
  port:
    targetPort: http
  tls:
    termination: edge
  to:
    kind: Service
    name: falco-falcosidekick-ui
  wildcardPolicy: None

After deploying the Route we can access the Falco UI with the hostname specified in the Route object. The default credentials seem to be admin/admin, which is kind of strange for a security tool. Maybe that's the reason Falco does not expose the UI by default.

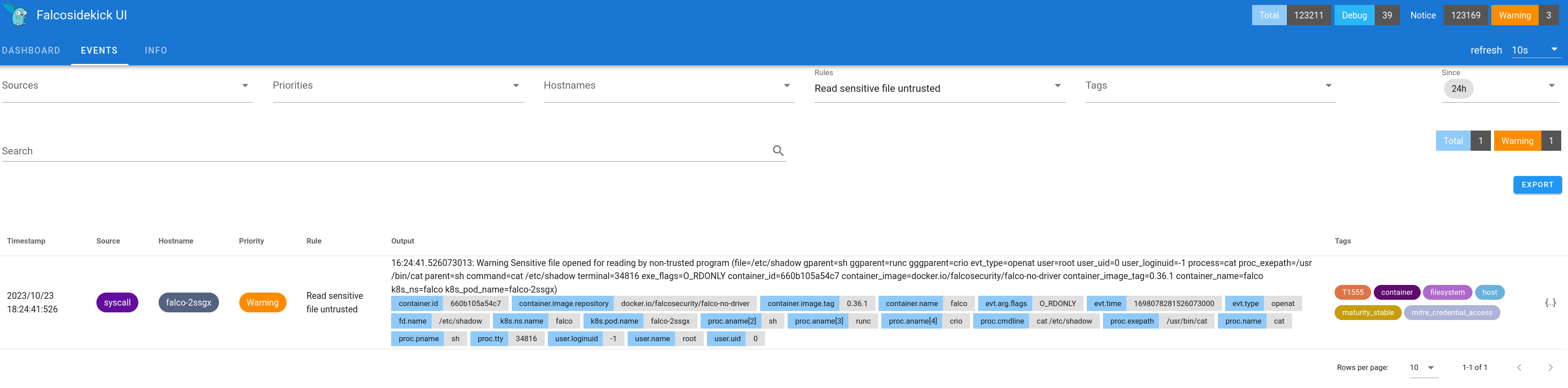

Creating an event

As a last test let's try to trigger an event. We open a shell in one of the falco DaemonSet pods and execute a suspicious command:

$ oc rsh falco-2ssgx

Defaulted container "falco" out of: falco, falcoctl-artifact-follow, falco-driver-loader (init), falcoctl-artif

# cat /etc/shadow

root:*:19639:0:99999:7:::

daemon:*:19639:0:99999:7:::

bin:*:19639:0:99999:7:::

sys:*:19639:0:99999:7:::

sync:*:19639:0:99999:7:::

games:*:19639:0:99999:7:::

man:*:19639:0:99999:7:::

lp:*:19639:0:99999:7:::

mail:*:19639:0:99999:7:::

news:*:19639:0:99999:7:::

uucp:*:19639:0:99999:7:::

proxy:*:19639:0:99999:7:::

www-data:*:19639:0:99999:7:::

backup:*:19639:0:99999:7:::

list:*:19639:0:99999:7:::

irc:*:19639:0:99999:7:::

_apt:*:19639:0:99999:7:::

nobody:*:19639:0:99999:7:::

#

And we can see an event with priority Warning in the Falco UI.
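Since we set json_output: true, the same alert should also show up as a JSON line in the log of the falco container, so it can be checked without the UI. A minimal sketch that filters such log lines by their priority field; the function name `falco_alerts_with_priority` is made up for this post, and a plain grep is used on the assumption that each alert is emitted as a single JSON line:

```shell
# Sketch: read Falco JSON log lines on stdin and keep those whose
# "priority" field equals the given value.
# falco_alerts_with_priority is a hypothetical helper.
falco_alerts_with_priority() {
  # Allow optional spaces after the colon, since JSON spacing may vary.
  grep -E "\"priority\": *\"$1\""
}

# Usage, with the pod from the test above:
#   oc logs -n falco -c falco falco-2ssgx | falco_alerts_with_priority Warning
```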

That's it, seems like Falco is successfully running on OpenShift 4.12.

Copyright © 2020 - 2024 Toni Schmidbauer & Thomas Jungbauer

Toni Schmidbauer
