Running Falco on OpenShift 4.12

- By: Toni Schmidbauer ( Lastmod: 2023-11-29 ) - 3 min read

As mentioned in our previous post about Falco, Falco is a security tool that monitors kernel events such as system calls, as well as Kubernetes audit logs, and provides real-time alerts.

In this post I'll show how to customize Falco for a specific use case. We would like to monitor the following events:

- An interactive shell is opened in a container

- Log all commands executed in an interactive shell in a container

- Log reads and writes to files within an interactive shell inside a container

- Log commands executed via `kubectl exec` / `oc exec`, which use the pod/exec Kubernetes endpoint

The rules we created for these kinds of events are available here.

Deploying custom rules and disabling the default ruleset

Falco comes with a quite elaborate ruleset for creating security relevant events. But for our use case we just want to deploy a specific set of rules (see the list above).

As we are deploying Falco via Helm, we use the following values for `rules_file`:

    falco:
      rules_file:
        - /etc/falco/extra-rules.d
        - /etc/falco/rules.d

This instructs Falco to only load rules from the directories mentioned above.

We use a Kustomize configMapGenerator to create a Kubernetes ConfigMap from our custom rules file:

    configMapGenerator:
      - name: falco-extra-rules
        options:
          disableNameSuffixHash: true
        files:
          - falco-extra-rules.yaml

The complete Kustomize configuration is here.

Furthermore, in the Helm values file we instruct Falco to mount the custom rules ConfigMap created above:

    mounts:
      volumes:
        - name: falco-extra-rules-volume
          optional: true
          configMap:
            name: falco-extra-rules
      volumeMounts:
        - mountPath: /etc/falco/extra-rules.d
          name: falco-extra-rules-volume

The complete Helm values file is available here.

Disable automatic rule updates

Falco updates all rules when it starts (via an initContainer) and also updates those rules on a regular basis. We would also like to disable this behavior:

    falcoctl:
      artifact:
        install:
          enabled: false
        follow:
          enabled: false

Create events for kubectl/oc exec

One problem is monitoring pod exec events. Using Falco's eBPF monitoring capabilities, we found no way to limit those events to pod execs. This might be because the Falco rule language is new to us, and maybe there is a way to do this with eBPF filtering. Just let us know if you find a solution!

But we came up with a different way of capturing pod/exec events:

Falco also allows monitoring Kubernetes audit events, logged by the kube-apiserver. Every time you hit the pod/exec endpoint, K8s logs the following event in the audit log:

    {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","auditID":"5c19c1d0-00a7-4af5-a236-5345b5963581","stage":"ResponseComplete","requestURI":"/api/v1/namespaces/falco/pods/falco-8mqj7/exec?command=cat\u0026command=%2Fetc%2Ffalco%2Fextra-rules.d%2Ffalco-extra-rules.yaml\u0026container=falco\u0026stderr=true\u0026stdout=true","verb":"create","user":{"username":"root","uid":"d82ec74a-75e3-4798-a084-4b766dcea5ef","groups":["cluster-admins","system:authenticated:oauth","system:authenticated"],"extra":{"scopes.authorization.openshift.io":["user:full"]}},"sourceIPs":["10.0.32.220"],"userAgent":"oc/4.13.0 (linux/amd64) kubernetes/92b1a3d","objectRef":{"resource":"pods","namespace":"falco","name":"falco-8mqj7","apiVersion":"v1","subresource":"exec"},"responseStatus":{"metadata":{},"code":101},"requestReceivedTimestamp":"2023-11-13T17:23:16.999602Z","stageTimestamp":"2023-11-13T17:23:17.231121Z","annotations":{"authorization.k8s.io/decision":"allow","authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"root-cluster-admin\" of ClusterRole \"cluster-admin\" to User \"root\""}}

As you can hopefully see, the command executed is available in the requestURI field.
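To make the structure of that field concrete, here is a minimal Python sketch that pulls the executed command out of an audit log line. It is only an illustration for inspecting audit logs by hand, not part of our Falco setup; the function name `exec_command` is made up for this example, and the sample event is an abbreviated copy of the one above.

```python
import json
from typing import List, Optional
from urllib.parse import parse_qs, urlsplit


def exec_command(audit_line: str) -> Optional[List[str]]:
    """Return the argv of a pod exec request, or None for other audit events."""
    event = json.loads(audit_line)
    ref = event.get("objectRef", {})
    if ref.get("resource") != "pods" or ref.get("subresource") != "exec":
        return None
    # Each argument is passed as its own "command" query parameter.
    query = urlsplit(event.get("requestURI", "")).query
    return parse_qs(query).get("command", [])


# Abbreviated copy of the audit event shown above.
SAMPLE_EVENT = json.dumps({
    "requestURI": "/api/v1/namespaces/falco/pods/falco-8mqj7/exec"
                  "?command=cat"
                  "&command=%2Fetc%2Ffalco%2Fextra-rules.d%2Ffalco-extra-rules.yaml"
                  "&container=falco&stderr=true&stdout=true",
    "verb": "create",
    "objectRef": {"resource": "pods", "namespace": "falco",
                  "name": "falco-8mqj7", "subresource": "exec"},
})

print(exec_command(SAMPLE_EVENT))
```

`parse_qs` also takes care of the URL encoding, so `%2Fetc%2Ffalco...` comes back as a regular path.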

So we enabled the k8saudit Falco plugin and created an additional rule for these kinds of events.

    falco:
      plugins:
        - name: k8saudit
          library_path: libk8saudit.so
          init_config:
          #   maxEventSize: 262144
          #   webhookMaxBatchSize: 12582912
          #   sslCertificate: /etc/falco/falco.pem
          # open_params: "http://:9765/k8s-audit"
          open_params: "/host/var/log/kube-apiserver/audit.log"
        - name: json
          library_path: libjson.so
          init_config: ""

Implementing event routing

We had an additional requirement to route events based on the following rules:

- Events that do not contain sensitive data (like usernames) should go to a specific Kafka topic

- Events that do contain sensitive data (like usernames) should be routed to another Kafka topic

Our first thought was to leverage Falcosidekick's minimumpriority field for routing. Events with sensitive data would get a higher priority. But the sink with a lower minimumpriority would get events with higher priority as well, which means events with sensitive data.
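The following Python sketch models that problem. It is a simplified model of threshold-based filtering, not Falcosidekick's actual code; the sink names and thresholds are hypothetical.

```python
from typing import Dict, List

# Falco priority levels, ordered from most to least severe.
PRIORITIES = ["emergency", "alert", "critical", "error",
              "warning", "notice", "informational", "debug"]


def severity(priority: str) -> int:
    """Map a priority name to a number; larger means more severe."""
    return len(PRIORITIES) - PRIORITIES.index(priority.lower())


def receiving_sinks(event_priority: str, sinks: Dict[str, str]) -> List[str]:
    """Return the sinks whose minimum priority is met by the event."""
    return [name for name, minimum in sinks.items()
            if severity(event_priority) >= severity(minimum)]


# Hypothetical sink names and thresholds, for illustration only.
SINKS = {"general-topic": "notice", "sensitive-topic": "warning"}

print(receiving_sinks("notice", SINKS))   # event without sensitive data
print(receiving_sinks("warning", SINKS))  # event with sensitive data
```

The second call returns both sinks: a minimum priority is only a lower bound, so it cannot keep the higher-priority (sensitive) events out of the general topic.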

Furthermore, as far as we know, Falcosidekick currently supports only one Kafka configuration (we would need two, one per topic).

At this point in time we are not aware of a possibility to implement this with Falco or Falcosidekick directly.

There are some discussions upstream on implementing such a feature:

- https://github.com/falcosecurity/falcosidekick/issues/161

- https://github.com/falcosecurity/falcosidekick/issues/161#issuecomment-747714289

- https://github.com/falcosecurity/falcosidekick/issues/224

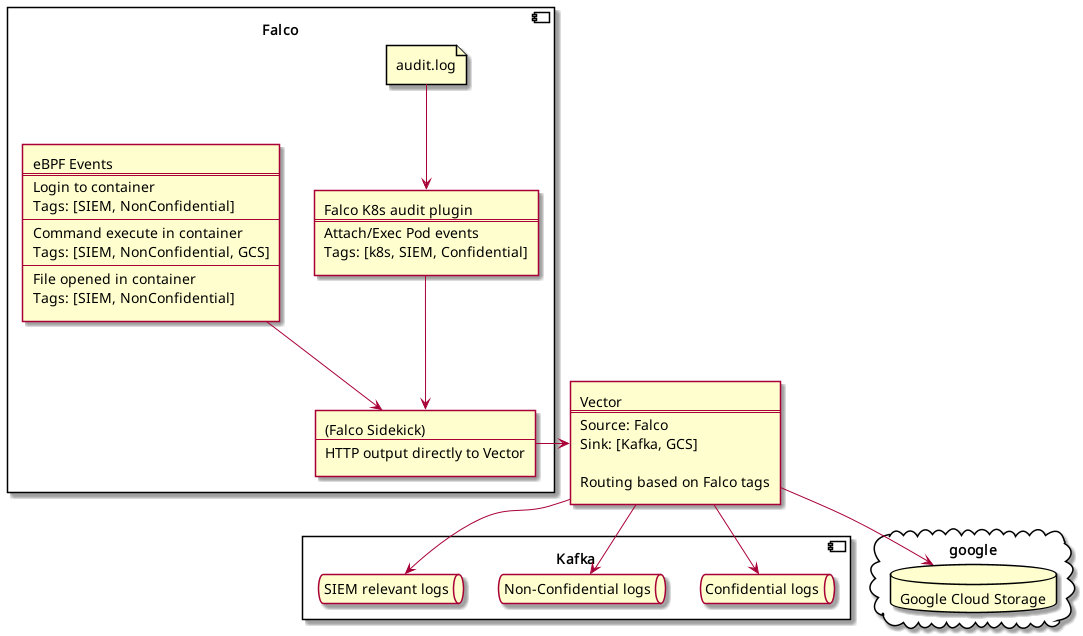

Our current idea is to use Vector for event routing. We will try to implement the following pipeline:

Tips and Tricks

Monitor Redis disk usage

One small hint when using falcosidekick-ui to debug or monitor events: at one point our Redis volume filled up, and suddenly no new events showed up in the UI.

We stopped the UI and Redis pods, removed the PVC and just ran our kustomization again, to recreate the PVC and the pods.

Monitor Falco pod logs when changing rules

It's always wise to monitor one Falco pod for errors when deploying new rules. For example, at one point we hit the following error:

    {"hostname":"falco-2hlkm","output":"Falco internal: hot restart failure: /etc/falco/extra-rules.d/falco-extra-rules.yaml: Invalid\n1 Errors:\nIn rules content: (/etc/falco/extra-rules.d/falco-extra-rules.yaml:0:0)\n rule 'Terminal shell in container': (/etc/falco/extra-rules.d/falco-extra-rules.yaml:25:2)\n condition expression: (\"spawned_process a...\":26:71)\n------\n...ocess and container and shell_procs and proc.tty != 0 and container_entrypoint\n ^\n------\nLOAD_ERR_VALIDATE (Error validating rule/macro/list/exception objects): Undefined macro 'container_entrypoint' used in filter.\n","output_fields":{},"priority":"Critical","rule":"Falco internal: hot restart failure","source":"internal","time":"2023-11-13T11:47:14.639547735Z"}

Falco is quite resilient when it comes to errors in rules files and provides useful hints on what might be wrong:

    Undefined macro 'container_entrypoint' used in filter

So we just added the missing macro and all was swell again.
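Such messages are easy to miss between regular events. As a rough sketch, a few lines of Python can pick them out of the pod log, assuming Falco writes JSON output as in the log line above. The function name `internal_messages` is made up for this example; the sample input contains an abbreviated copy of the error above and a hypothetical regular event.

```python
import json
from typing import Iterable, Iterator


def internal_messages(lines: Iterable[str]) -> Iterator[str]:
    """Yield the "output" text of events whose "source" is "internal"."""
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # ignore log lines that are not JSON
        if isinstance(event, dict) and event.get("source") == "internal":
            yield event.get("output", "")


# Sample input: an abbreviated copy of the error above, a hypothetical
# regular event, and a plain text line.
SAMPLE_LOG = [
    json.dumps({
        "hostname": "falco-2hlkm",
        "output": "Falco internal: hot restart failure: ... Undefined macro "
                  "'container_entrypoint' used in filter.\n",
        "priority": "Critical",
        "rule": "Falco internal: hot restart failure",
        "source": "internal",
    }),
    json.dumps({"rule": "Example rule", "source": "syscall", "output": "example"}),
    "plain text line that is not JSON",
]

for message in internal_messages(SAMPLE_LOG):
    print(message.strip())
```

To follow a live pod, pass `sys.stdin` as `lines` and pipe the output of `oc logs -f` for one Falco pod into the script.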

Copyright © 2020 - 2024 Toni Schmidbauer & Thomas Jungbauer
