Topology Spread Constraints


Topology spread constraints, available since Kubernetes 1.19 (OpenShift 4.6), are another way to control where pods are started. They let you use failure domains such as zones or regions, or define custom topology domains. The feature relies heavily on node labels, which define the topology domains. It is a more granular approach than affinity and helps to achieve higher availability.

Understanding Topology Spread Constraints

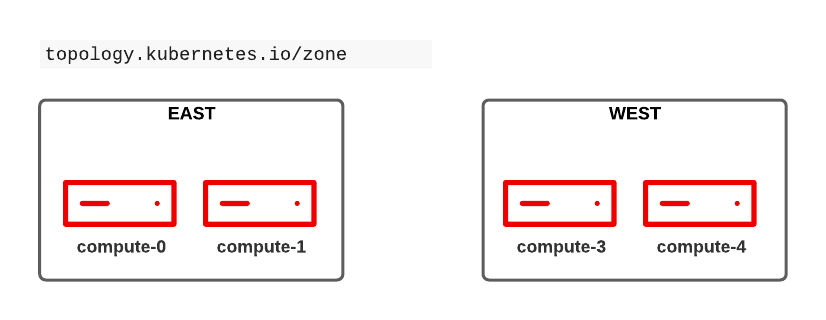

As in the pod affinity example, imagine we have two zones (east and west) in our cluster of 4 compute nodes.

These zones are defined by node labels, which can be created with the following commands:

oc label nodes compute-0 compute-1 topology.kubernetes.io/zone=east

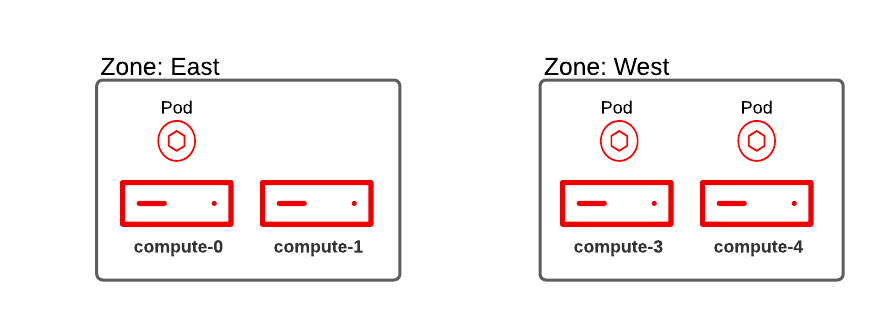

oc label nodes compute-2 compute-3 topology.kubernetes.io/zone=west

Now let’s scale our web application to 3 pods. The OpenShift scheduler will try to spread the pods evenly across the cluster:

oc get pods -n podtesting -o wide

django-psql-example-31-jrhsr 0/1 Running 0 8s 10.130.2.112 compute-2 <none> <none>

django-psql-example-31-q66hk 0/1 Running 0 8s 10.131.1.219 compute-3 <none> <none>

django-psql-example-31-xv7jc 0/1 Running 0 8s 10.128.3.115 compute-0 <none> <none>

If a new pod is started, the scheduler may try to start it on compute-1. However, this is best effort only: the scheduler does not guarantee it and may just as well start the pod on one of the nodes in zone west. With topologySpreadConstraints this can be controlled, so that incoming pods can only be scheduled on a node in zone east (either compute-0 or compute-1).

Configure TopologySpreadConstraint

Let’s configure our topology by adding the following into the DeploymentConfig:

    spec:
      [...]
      template:
        [...]
          topologySpreadConstraints:
            - maxSkew: 1
              topologyKey: topology.kubernetes.io/zone
              whenUnsatisfiable: DoNotSchedule
              labelSelector:
                matchLabels:
                  name: django-psql-example

This defines the following parameters:

maxSkew - defines the degree to which pods may be unevenly distributed (must be greater than zero). Depending on the whenUnsatisfiable parameter the behaviour differs:

whenUnsatisfiable == DoNotSchedule: the pod is not scheduled if maxSkew would be exceeded.

whenUnsatisfiable == ScheduleAnyway: the scheduler still schedules the pod and gives topologies that would decrease the skew a better score.

topologyKey - key of the node labels. Nodes that carry this key with the same value belong to the same topology domain.

whenUnsatisfiable - defines what to do with a pod if the spread constraint is not met. Can be either DoNotSchedule (default) or ScheduleAnyway.

labelSelector - used to find matching pods. Found pods are considered to be part of the topology domain. In our example we simply use a default label of the application: name: django-psql-example

If a 4th pod is now started, the topologySpreadConstraints will only allow it on one of the nodes of zone=east (either compute-0 or compute-1). It cannot be scheduled on nodes in zone=west, since that would violate maxSkew: 1.

If it were placed in zone west, the pod distribution would be 1 in zone east and 3 in zone west. The skew would then be 3 - 1 = 2, which exceeds the allowed maxSkew of 1.
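The arithmetic above can be sketched in a few lines of Python. This is a simplified model and not the scheduler's actual code: it assumes the skew of a placement is the pod count of the target domain after placement minus the lowest pod count of any domain. The function names are made up for this illustration; the starting distribution (1 pod in east, 2 in west) is taken from the pod listing earlier.

```python
def skew_after_placement(pods_per_domain, target):
    """Skew of `target` if one more matching pod were placed there.

    Simplified assumption: skew = (pods in target + 1) - (lowest count of any domain).
    """
    lowest = min(pods_per_domain.values())
    return pods_per_domain[target] + 1 - lowest


def allowed_domains(pods_per_domain, max_skew):
    """Domains that stay within max_skew (DoNotSchedule behaviour)."""
    return sorted(
        domain
        for domain in pods_per_domain
        if skew_after_placement(pods_per_domain, domain) <= max_skew
    )


# Current state from the example: compute-0 (east), compute-2 and compute-3 (west)
zones = {"east": 1, "west": 2}

print(skew_after_placement(zones, "west"))  # 3 - 1 = 2, violates maxSkew: 1
print(skew_after_placement(zones, "east"))  # 2 - 1 = 1, allowed
print(allowed_domains(zones, max_skew=1))   # ['east']
```

With whenUnsatisfiable: ScheduleAnyway the same skew value would only lower the score of a domain instead of excluding it.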

Configure multiple TopologySpreadConstraints

It is possible to configure multiple TopologySpreadConstraints. In that case all constraints must be satisfied (logical AND). For example:

    spec:
      [...]
      template:
        [...]
          topologySpreadConstraints:
            - maxSkew: 1
              topologyKey: topology.kubernetes.io/zone
              whenUnsatisfiable: DoNotSchedule
              labelSelector:
                matchLabels:
                  name: django-psql-example
            - maxSkew: 1
              topologyKey: kubernetes.io/hostname
              whenUnsatisfiable: DoNotSchedule
              labelSelector:
                matchLabels:
                  name: django-psql-example

The first part is identical to our first example and allows the scheduler to start a pod in topology.kubernetes.io/zone: east (compute-0 or compute-1). The second constraint uses the node hostname instead of a zone as the topology domain. Now the scheduler can only deploy into zone=east AND onto node=compute-1.
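To see why only compute-1 remains, the logical AND of both constraints can be modelled as a small Python sketch. It is an illustration under assumptions, not the scheduler's implementation: the skew rule is the simplified one (pods in the target domain after placement minus the lowest count of any domain), the helper names are hypothetical, and the pod counts per node come from the pod listing of this example.

```python
from collections import Counter

# node name -> (zone, number of matching pods currently on the node)
NODES = {
    "compute-0": ("east", 1),
    "compute-1": ("east", 0),
    "compute-2": ("west", 1),
    "compute-3": ("west", 1),
}


def domain_counts(nodes, key):
    """Matching pods per topology domain; key is 'zone' or 'hostname'."""
    counts = Counter()
    for name, (zone, pods) in nodes.items():
        counts[zone if key == "zone" else name] += pods
    return counts


def feasible_nodes(nodes, constraints):
    """Nodes on which a new pod satisfies ALL (key, max_skew) constraints."""
    result = []
    for name, (zone, _pods) in nodes.items():
        ok = True
        for key, max_skew in constraints:
            counts = domain_counts(nodes, key)
            domain = zone if key == "zone" else name
            if counts[domain] + 1 - min(counts.values()) > max_skew:
                ok = False  # this constraint would be violated
        if ok:
            result.append(name)
    return result


print(feasible_nodes(NODES, [("zone", 1)]))                   # ['compute-0', 'compute-1']
print(feasible_nodes(NODES, [("zone", 1), ("hostname", 1)]))  # ['compute-1']
```

The zone constraint alone leaves both nodes of zone east; adding the hostname constraint removes compute-0, because it already runs one pod while compute-1 runs none.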

Conflicts with multiple TopologySpreadConstraints

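If you use multiple constraints, conflicts are possible. For example, in a 3-node cluster with the 2 zones east and west, the zone constraint and the hostname constraint can point to different nodes, so that no node satisfies both and the pod cannot be scheduled. In such cases maxSkew can be increased, or whenUnsatisfiable can be set to ScheduleAnyway for one of the constraints.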
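A minimal sketch of such a conflict, assuming a hypothetical pod distribution (node names and counts are invented for illustration) and the same simplified skew rule: pods in the target domain after placement minus the lowest count of any domain. Constraints with ScheduleAnyway are modelled as soft, meaning they never filter out a node.

```python
# Hypothetical 3-node cluster: node name -> (zone, matching pods on the node)
NODES = {
    "node-a": ("east", 2),
    "node-b": ("east", 1),
    "node-c": ("west", 2),
}


def counts_for(nodes, key):
    """Matching pods per topology domain; key is 'zone' or 'hostname'."""
    counts = {}
    for name, (zone, pods) in nodes.items():
        domain = zone if key == "zone" else name
        counts[domain] = counts.get(domain, 0) + pods
    return counts


def candidates(nodes, constraints):
    """Nodes passing every hard constraint (key, max_skew, when_unsatisfiable)."""
    passing = []
    for name, (zone, _pods) in nodes.items():
        ok = True
        for key, max_skew, when in constraints:
            if when == "ScheduleAnyway":
                continue  # soft constraint: influences scoring only
            counts = counts_for(nodes, key)
            domain = zone if key == "zone" else name
            if counts[domain] + 1 - min(counts.values()) > max_skew:
                ok = False
        if ok:
            passing.append(name)
    return passing


strict = [("zone", 1, "DoNotSchedule"), ("hostname", 1, "DoNotSchedule")]
wider = [("zone", 1, "DoNotSchedule"), ("hostname", 2, "DoNotSchedule")]
soft = [("zone", 1, "DoNotSchedule"), ("hostname", 1, "ScheduleAnyway")]

print(candidates(NODES, strict))  # [] -> zone wants node-c, hostname wants node-b
print(candidates(NODES, wider))   # ['node-c']
print(candidates(NODES, soft))    # ['node-c']
```

Both remedies named above resolve the conflict in this model: a larger maxSkew on the hostname constraint, or turning it into a soft constraint.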

Cleanup

Remove the topologySpreadConstraints block from the DeploymentConfig:

    spec:
      [...]
      template:
        [...]
          topologySpreadConstraints:
            - maxSkew: 1
              topologyKey: topology.kubernetes.io/zone
              whenUnsatisfiable: DoNotSchedule
              labelSelector:
                matchLabels:
                  name: django-psql-example

Copyright © 2020 - 2024 Toni Schmidbauer & Thomas Jungbauer

Thomas Jungbauer
