Pod Affinity/Anti-Affinity


While nodeSelector provides a very easy way to control where a pod is scheduled, the affinity/anti-affinity feature expands this configuration with more expressive rules, such as logical AND operators, constraints against labels on other pods, or soft rules instead of hard requirements.

The feature comes with two types:

pod affinity/anti-affinity - allows constraints against the labels of other pods rather than node labels.

node affinity - allows pods to specify a group of nodes they can be placed on.


Pod Affinity and Anti-Affinity

Affinity and anti-affinity control the nodes on which a pod should (or should not) be scheduled, based on the labels of pods that are already running on the node. This is a different approach than nodeSelector, since it does not directly take the node labels into account. One example of such a setup: you have dedicated nodes for development and production workloads, and you have to be sure that pods of dev and prod applications never run on the same node.

In short, affinity and anti-affinity are defined as follows:

Pod affinity - tells the scheduler to place a new pod onto the same node as other pods (the other pods are selected using label selectors)

For example:

I want to run where this other labelled pod is already running (pods of the same service shall run on the same node).

Pod anti-affinity - prevents the scheduler from placing a new pod onto the same node as pods with matching labels

For example:

I definitely do not want to start a pod where this other pod with the defined label is running (for example, to prevent all pods of the same service from running in the same availability zone).
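The two definitions can be illustrated with a small toy model in Python. It assumes one node per topology domain (as with the kubernetes.io/hostname label); the node names, pod labels and helper functions are hypothetical, and this is only a sketch of the idea, not how the scheduler is implemented.

```python
from typing import Dict, List

# Hypothetical cluster state: labels of the pods already running on each node.
RUNNING: Dict[str, List[Dict[str, str]]] = {
    "node-a": [{"app": "web"}],
    "node-b": [{"app": "db"}],
    "node-c": [],
}


def has_matching_pod(node: str, key: str, value: str) -> bool:
    """True if at least one pod on `node` carries the label key=value."""
    return any(pod.get(key) == value for pod in RUNNING[node])


def affinity_nodes(key: str, value: str) -> List[str]:
    """Pod affinity: only nodes that already run a matching pod."""
    return [n for n in RUNNING if has_matching_pod(n, key, value)]


def anti_affinity_nodes(key: str, value: str) -> List[str]:
    """Pod anti-affinity: only nodes that do NOT run a matching pod."""
    return [n for n in RUNNING if not has_matching_pod(n, key, value)]


print(affinity_nodes("app", "web"))       # ['node-a']
print(anti_affinity_nodes("app", "web"))  # ['node-b', 'node-c']
```

Affinity and anti-affinity are the same check with the result inverted: one keeps the nodes with a matching pod, the other keeps the nodes without one.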

As described in the official Kubernetes documentation, two things should be considered:


Using Affinity/Anti-Affinity

There are currently two types of pod affinity/anti-affinity rules:

requiredDuringSchedulingIgnoredDuringExecution (required for short) - a hard requirement that must be met before a pod can be scheduled

preferredDuringSchedulingIgnoredDuringExecution (preferred for short) - a soft requirement the scheduler tries to meet, but does not guarantee

| Both types can be defined in the same specification. In such a case, the node must meet the required rule, and the scheduler then tries, on a best-effort basis, to also meet the preferred rule. |

Preparing node labels

| Remember the prerequisites explained in Pod Placement - Introduction: we have 4 compute nodes and an example web application up and running. |

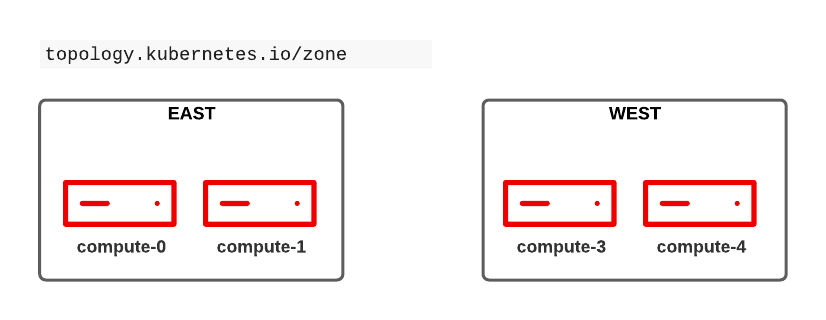

Before we start with affinity rules, we need to label all nodes. Let’s create 2 zones (east and west) for our compute nodes using the well-known label topology.kubernetes.io/zone:

```shell
oc label nodes compute-0 compute-1 topology.kubernetes.io/zone=east
oc label nodes compute-2 compute-3 topology.kubernetes.io/zone=west
```

Configure pod affinity rule

In our example, we have one database pod and multiple web application pods. Let’s imagine we would like to always run these pods in the same zone.

A pod affinity rule states that a pod can be scheduled onto a node ONLY if that node is in the same zone as at least one already-running pod with a certain label.

This means we must first label the postgres pod accordingly. Let’s label the pod with security=zone1 by adding the label to the pod template of the postgresql DeploymentConfig:

```shell
oc patch dc postgresql -n podtesting --type='json' -p='[{"op": "add", "path": "/spec/template/metadata/labels/security", "value": "zone1" }]'
```

After a while, the postgres pod is restarted on one of the 4 compute nodes (since we did not specify in which zone this single pod shall be started), here compute-1 (zone east):

```
oc get pods -n podtesting -o wide | grep Running

postgresql-5-6v5h6 1/1 Running 0 119s 10.129.2.24 compute-1 <none> <none>
```

As a second step, the deployment configuration of the web application must be modified. Remember, we want to run web application pods only on nodes located in the same zone as the postgres pod. In our example, this would be either compute-0 or compute-1.

Modify the config accordingly:

```yaml
kind: DeploymentConfig
apiVersion: apps.openshift.io/v1
[...]
  namespace: podtesting
spec:
  [...]
  template:
    [...]
    spec:
      [...]
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: security   # (1)
                    operator: In    # (2)
                    values:
                      - zone1       # (3)
              topologyKey: topology.kubernetes.io/zone   # (4)
```

| 1 | The key of the label of a pod that is already running in the topology domain (here: the zone) is "security". |
| 2 | With the operator "In", the postgres pod must have a matching key (security) whose value is one of the listed values (zone1). Other operators, like "NotIn", "Exists" or "DoesNotExist", are available as well. |
| 3 | The value must be "zone1". |
| 4 | topology.kubernetes.io/zone is used as topology key. The application can be deployed on any node whose zone label has the same value as that of the node running the postgres pod. |
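To make the operators from callout 2 more concrete, here is a minimal Python sketch of how a single matchExpressions entry could be evaluated against a pod's labels. It is a simplified model, not the Kubernetes implementation; the pod labels other than security=zone1 are hypothetical.

```python
from typing import Dict, List, Optional


def expression_matches(labels: Dict[str, str], key: str, operator: str,
                       values: Optional[List[str]] = None) -> bool:
    """Evaluate one matchExpressions entry against a pod's labels (simplified)."""
    values = values or []  # Exists/DoesNotExist take no values
    if operator == "In":
        return key in labels and labels[key] in values
    if operator == "NotIn":
        # Also true when the pod does not carry the key at all.
        return key not in labels or labels[key] not in values
    if operator == "Exists":
        return key in labels
    if operator == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unsupported operator: {operator}")


# Hypothetical pod labels; only security=zone1 comes from this article.
postgres = {"name": "postgresql", "security": "zone1"}
web = {"name": "django-psql-example"}

print(expression_matches(postgres, "security", "In", ["zone1"]))  # True
print(expression_matches(web, "security", "In", ["zone1"]))       # False
print(expression_matches(web, "security", "NotIn", ["zone1"]))    # True
print(expression_matches(postgres, "security", "Exists"))         # True
```

When several entries are listed under matchExpressions, all of them must match (they are ANDed). Note that "NotIn" also matches pods without the key, which matters when choosing between "In" and "NotIn".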

Setting this (and perhaps scaling up the replicas a little) starts all web application pods on either compute-0 or compute-1.

In other words: on nodes in the same zone as the postgres pod with the label security=zone1.
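The expected placement can be reproduced with a toy model of the required rule. This sketch assumes the zone labels set earlier and the postgres pod running on compute-1; only the security label is modelled, and the helper names are hypothetical, not scheduler APIs.

```python
from typing import Dict, List, Set

# Zone labels as set with `oc label nodes ...` above.
NODE_ZONE: Dict[str, str] = {
    "compute-0": "east",
    "compute-1": "east",
    "compute-2": "west",
    "compute-3": "west",
}

# Labels of already-running pods per node; only the postgres pod is modelled.
RUNNING: Dict[str, List[Dict[str, str]]] = {
    "compute-0": [],
    "compute-1": [{"security": "zone1"}],
    "compute-2": [],
    "compute-3": [],
}


def zones_with_match(key: str, value: str) -> Set[str]:
    """Zones (topology domains) that run at least one pod labelled key=value."""
    return {NODE_ZONE[node] for node, pods in RUNNING.items()
            if any(p.get(key) == value for p in pods)}


def feasible_for_affinity(key: str, value: str) -> List[str]:
    """Required podAffinity with topologyKey=zone: the node's zone must contain a match."""
    zones = zones_with_match(key, value)
    return [node for node, zone in NODE_ZONE.items() if zone in zones]


print(feasible_for_affinity("security", "zone1"))  # ['compute-0', 'compute-1']
```

Because the rule is evaluated per zone, compute-0 is eligible even though the postgres pod itself runs on compute-1.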

```
oc get pods -n podtesting -o wide | grep Running

django-psql-example-13-4w6qd 1/1 Running 0 67s 10.128.2.58 compute-0 <none> <none>
django-psql-example-13-655dj 1/1 Running 0 67s 10.129.2.28 compute-1 <none> <none>
django-psql-example-13-9d4pj 1/1 Running 0 67s 10.129.2.27 compute-1 <none> <none>
django-psql-example-13-bdwhb 1/1 Running 0 67s 10.128.2.61 compute-0 <none> <none>
django-psql-example-13-d4jrw 1/1 Running 0 67s 10.128.2.57 compute-0 <none> <none>
django-psql-example-13-dm9qk 1/1 Running 0 67s 10.128.2.60 compute-0 <none> <none>
django-psql-example-13-ktmfm 1/1 Running 0 67s 10.129.2.25 compute-1 <none> <none>
django-psql-example-13-ldm56 1/1 Running 0 77s 10.128.2.55 compute-0 <none> <none>
django-psql-example-13-mh2f5 1/1 Running 0 67s 10.129.2.29 compute-1 <none> <none>
django-psql-example-13-qfkhq 1/1 Running 0 67s 10.129.2.26 compute-1 <none> <none>
django-psql-example-13-v88qv 1/1 Running 0 67s 10.128.2.56 compute-0 <none> <none>
django-psql-example-13-vfgf4 1/1 Running 0 67s 10.128.2.59 compute-0 <none> <none>
postgresql-5-6v5h6 1/1 Running 0 3m18s 10.129.2.24 compute-1 <none> <none>
```

Configure pod anti-affinity rule

For now, the database pod and the web application pods are running on nodes in the same zone. However, somebody asks us to configure it the other way around: the web application should not run in the same zone as postgresql.

Here we can use the Anti-Affinity feature.

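| Changing the operator in the affinity rule from "In" to "NotIn" is not an equivalent alternative: "NotIn" also matches pods that do not carry the security label at all, so the web application pods would still be attracted to any zone running such a pod. |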
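The difference between podAntiAffinity with "In" and podAffinity with "NotIn" can be checked with a toy model. This sketch assumes that, besides the postgres pod on compute-1, an unlabelled web pod (hypothetical label app=web) is still running on compute-0 from the affinity example; it is an illustration, not the scheduler's logic.

```python
from typing import Dict, List, Set

NODE_ZONE: Dict[str, str] = {
    "compute-0": "east", "compute-1": "east",
    "compute-2": "west", "compute-3": "west",
}

# Assumed state: postgres (security=zone1) on compute-1 and a web pod
# without a security label (hypothetical app=web) on compute-0.
RUNNING: Dict[str, List[Dict[str, str]]] = {
    "compute-0": [{"app": "web"}],
    "compute-1": [{"security": "zone1"}],
    "compute-2": [],
    "compute-3": [],
}


def matches(labels: Dict[str, str], operator: str) -> bool:
    """Selector 'security <operator> (zone1)' for In and NotIn only."""
    if operator == "In":
        return labels.get("security") == "zone1"
    # NotIn: true for a different value AND for a missing key.
    return labels.get("security") != "zone1"


def zones_with_match(operator: str) -> Set[str]:
    """Zones that run at least one pod matched by the selector."""
    return {NODE_ZONE[n] for n, pods in RUNNING.items()
            if any(matches(p, operator) for p in pods)}


def anti_affinity_in() -> List[str]:
    """podAntiAffinity + In: avoid every zone running a security=zone1 pod."""
    avoid = zones_with_match("In")
    return [n for n, z in NODE_ZONE.items() if z not in avoid]


def affinity_not_in() -> List[str]:
    """podAffinity + NotIn: join any zone running a pod NOT labelled security=zone1."""
    join = zones_with_match("NotIn")
    return [n for n, z in NODE_ZONE.items() if z in join]


print(anti_affinity_in())  # ['compute-2', 'compute-3']
print(affinity_not_in())   # ['compute-0', 'compute-1']
```

In this assumed state, the "NotIn" variant keeps the web pods in the east zone, next to postgresql, while the anti-affinity rule moves them to the west zone as intended.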

```yaml
kind: DeploymentConfig
apiVersion: apps.openshift.io/v1
[...]
  namespace: podtesting
spec:
  [...]
  template:
    [...]
    spec:
      [...]
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: security   # (1)
                    operator: In    # (2)
                    values:
                      - zone1       # (3)
              topologyKey: topology.kubernetes.io/zone   # (4)
```

This forces the web application pods to run only on nodes in the "west" zone:

```
django-psql-example-16-4n9h5 1/1 Running 0 40s 10.131.1.53 compute-3 <none> <none>
django-psql-example-16-blf8b 1/1 Running 0 29s 10.130.2.63 compute-2 <none> <none>
django-psql-example-16-f9plb 1/1 Running 0 29s 10.130.2.64 compute-2 <none> <none>
django-psql-example-16-tm5rm 1/1 Running 0 28s 10.131.1.55 compute-3 <none> <none>
django-psql-example-16-x8lbh 1/1 Running 0 29s 10.131.1.54 compute-3 <none> <none>
django-psql-example-16-zb5fg 1/1 Running 0 28s 10.130.2.65 compute-2 <none> <none>
postgresql-5-6v5h6 1/1 Running 0 18m 10.129.2.24 compute-1 <none> <none>
```

Combining required and preferred affinities

It is possible to combine requiredDuringSchedulingIgnoredDuringExecution and preferredDuringSchedulingIgnoredDuringExecution. In such a case, the required affinity MUST be met, while the scheduler only tries to meet the preferred affinity. The following example combines these two types in an affinity and anti-affinity specification.

The podAffinity block defines the same rule as above: schedule the pod on a node in the same zone as a running pod with the label security=zone1.

The podAntiAffinity block defines that the pod should not be started on a node if a pod with the label security=zone2 is running in that node's zone. However, the scheduler might still do so, as long as the podAffinity rule is met.

```yaml
spec:
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchExpressions:
              - key: security
                operator: In
                values:
                  - zone1
          topologyKey: topology.kubernetes.io/zone
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 100   # (1)
          podAffinityTerm:
            labelSelector:
              matchExpressions:
                - key: security
                  operator: In
                  values:
                    - zone2
            topologyKey: topology.kubernetes.io/zone
```

| 1 | The weight (a value from 1 to 100) is used by the scheduler to score the nodes that passed the required rules. The higher the score, the more preferred the node. |
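The interplay of the two rule types can be sketched as "filter, then score". This Python model is simplified: it assumes a hypothetical cluster state with security=zone1 pods in both zones and a security=zone2 pod in west, and it subtracts the weight once per matching pod. The real scheduler normalizes scores and combines them with other scoring plugins.

```python
from typing import Dict, List, Tuple

NODE_ZONE: Dict[str, str] = {
    "compute-0": "east", "compute-1": "east",
    "compute-2": "west", "compute-3": "west",
}

# Hypothetical state: security=zone1 pods in both zones, one security=zone2 pod in west.
RUNNING: Dict[str, List[Dict[str, str]]] = {
    "compute-0": [{"security": "zone1"}],
    "compute-1": [],
    "compute-2": [{"security": "zone1"}],
    "compute-3": [{"security": "zone2"}],
}


def count_in_zone(zone: str, value: str) -> int:
    """Number of running pods labelled security=<value> in the given zone."""
    return sum(1 for node, pods in RUNNING.items() if NODE_ZONE[node] == zone
               for p in pods if p.get("security") == value)


def rank_nodes(weight: int = 100) -> List[Tuple[str, int]]:
    # 1) Filter: required podAffinity, the zone must run a security=zone1 pod.
    feasible = [n for n in NODE_ZONE if count_in_zone(NODE_ZONE[n], "zone1") > 0]
    # 2) Score: preferred podAntiAffinity, subtract the weight per security=zone2 pod.
    scored = [(n, -weight * count_in_zone(NODE_ZONE[n], "zone2")) for n in feasible]
    # Highest score first; sorted() is stable, so ties keep the node order.
    return sorted(scored, key=lambda item: item[1], reverse=True)


print(rank_nodes())
# [('compute-0', 0), ('compute-1', 0), ('compute-2', -100), ('compute-3', -100)]
```

All four nodes pass the required rule, so the west nodes remain valid targets; they are only ranked lower, which is exactly what makes the preferred rule "soft".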

topologyKey

It is important to understand the topologyKey setting. It is the key of a node label: all nodes that carry the same value for this label form one topology domain (here, one zone), and the affinity rule is evaluated per domain rather than per node. All nodes must be labelled consistently, otherwise unintended behaviour might occur.
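How a topologyKey splits the cluster into domains, and what happens to a node that was not labelled, can be sketched in Python. The node compute-4 and its missing label are hypothetical and only serve to show inconsistent labelling.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical node labels; compute-4 was never given a zone label.
NODE_LABELS: Dict[str, Dict[str, str]] = {
    "compute-0": {"topology.kubernetes.io/zone": "east"},
    "compute-1": {"topology.kubernetes.io/zone": "east"},
    "compute-2": {"topology.kubernetes.io/zone": "west"},
    "compute-3": {"topology.kubernetes.io/zone": "west"},
    "compute-4": {},
}


def topology_domains(topology_key: str) -> Dict[str, List[str]]:
    """Group nodes by the value of `topology_key`; unlabelled nodes join no domain."""
    domains: Dict[str, List[str]] = defaultdict(list)
    for node, labels in NODE_LABELS.items():
        if topology_key in labels:
            domains[labels[topology_key]].append(node)
    return dict(domains)


def unlabelled(topology_key: str) -> List[str]:
    """Nodes that cannot be placed into any domain for this topologyKey."""
    return [n for n, labels in NODE_LABELS.items() if topology_key not in labels]


print(topology_domains("topology.kubernetes.io/zone"))
# {'east': ['compute-0', 'compute-1'], 'west': ['compute-2', 'compute-3']}
print(unlabelled("topology.kubernetes.io/zone"))  # ['compute-4']
```

To our understanding of the scheduler, a node without the topologyKey label is not eligible for a required pod affinity rule, so in this model compute-4 would never receive a web application pod.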

As described in the Kubernetes documentation at Pod Affinity and Anti-Affinity, the topologyKey has some constraints:

Quote Kubernetes:

For pod affinity, empty topologyKey is not allowed in both requiredDuringSchedulingIgnoredDuringExecution and preferredDuringSchedulingIgnoredDuringExecution.

For pod anti-affinity, empty topologyKey is also not allowed in both requiredDuringSchedulingIgnoredDuringExecution and preferredDuringSchedulingIgnoredDuringExecution.

For requiredDuringSchedulingIgnoredDuringExecution pod anti-affinity, the admission controller LimitPodHardAntiAffinityTopology was introduced to limit topologyKey to kubernetes.io/hostname. If you want to make it available for custom topologies, you may modify the admission controller, or disable it.

Except for the above cases, the topologyKey can be any legal label-key.

End of quote

Cleanup

As cleanup, simply remove the affinity specification from the DeploymentConfig. The node labels can stay as they are, since they do no harm.

Summary

This concludes the quick overview of pod affinity. The next chapter will discuss node affinity rules, which allow affinity based on node labels.

Copyright © 2020 - 2024 Toni Schmidbauer & Thomas Jungbauer

Thomas Jungbauer
