Compliance Operator

OpenShift comes out of the box with a highly secure operating system, Red Hat Enterprise Linux CoreOS (RHCOS). This OS is immutable: no direct changes are made inside the OS; instead, all configuration is managed by OpenShift itself using MachineConfig objects. Nevertheless, hardening certain settings must still be considered. A hardening guide for OpenShift (the CIS Benchmark) can be downloaded at https://www.cisecurity.org/.

However, an automated way to perform such checks would be nice too. To achieve this, the Compliance Operator can be leveraged. It runs OpenSCAP checks and creates reports on whether the cluster is compliant or, as the official documentation describes it:

The Compliance Operator lets OpenShift Container Platform administrators describe the desired compliance state of a cluster and provides them with an overview of gaps and ways to remediate them. The Compliance Operator assesses compliance of both the Kubernetes API resources of OpenShift Container Platform, as well as the nodes running the cluster. The Compliance Operator uses OpenSCAP, a NIST-certified tool, to scan and enforce security policies provided by the content.

This article shows how to quickly install the operator and retrieve the first results. It is not full documentation; that is maintained by other people in the official Compliance Operator documentation. Remediation in particular is not covered here.

As prerequisites we have:

Installed OpenShift 4.6+ cluster

| The Compliance Operator is available for Red Hat Enterprise Linux CoreOS (RHCOS) deployments only. |

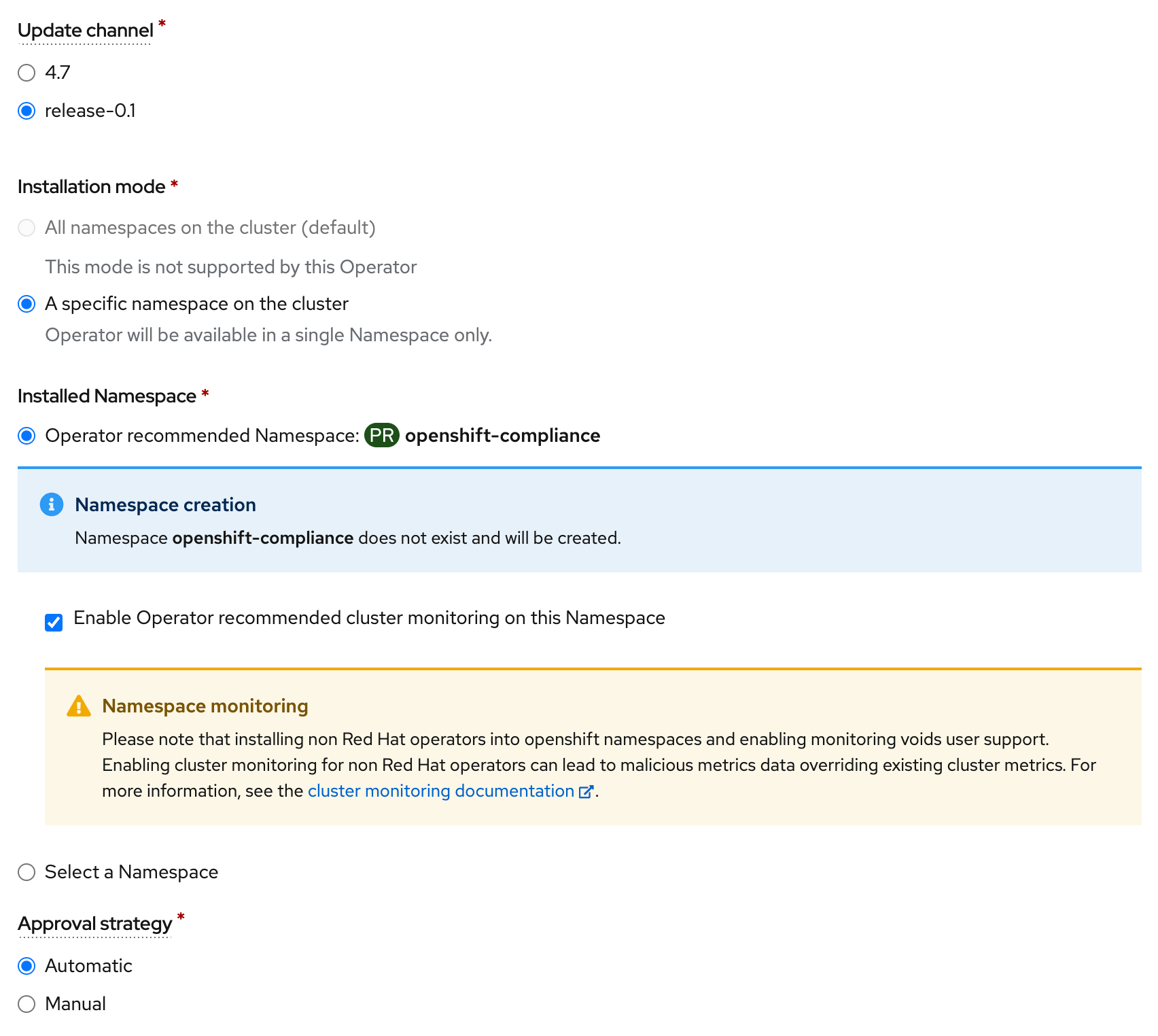

Install the Compliance Operator

The easiest way to deploy the Compliance Operator is by searching the OperatorHub which is available inside OpenShift.

Keep the default settings and wait until the operator has been installed.

Custom Resources (CRDs)

The operator brings a ton of new CRDs into the system:

ScanSetting … defines when and on which roles (worker, master …) a check shall be executed. It also defines a persistent volume (PV) to store the scan results. Two ScanSettings are created during the installation:

default: only scans, without automatically applying changes

default-auto-apply: can automatically remediate without extra steps

ScanSettingBinding … binds one or more profiles to a scan

Profile … Represents a compliance benchmark as a set of rules. This blog uses the CIS Benchmark profiles.

ProfileBundle … Bundles a security image, which is later used by Profiles.

Rule … Rules which are used by profiles to verify the state of the cluster.

TailoredProfile … Customized profile

ComplianceScan … scans which have been performed

ComplianceCheckResult … The results of a scan. Each ComplianceCheckResult represents the result of one compliance rule check

ComplianceRemediation … If a rule can be remediated automatically, this object is created.

Create a ScanSettingBinding object

The first step is to create a ScanSettingBinding object. (We reuse the default ScanSetting.)

Let’s create the following object, which uses the profiles ocp4-cis and ocp4-cis-node:

    apiVersion: compliance.openshift.io/v1alpha1
    kind: ScanSettingBinding
    metadata:
      name: cis-compliance
    profiles:
      - name: ocp4-cis-node # (1)
        kind: Profile
        apiGroup: compliance.openshift.io/v1alpha1
      - name: ocp4-cis # (2)
        kind: Profile
        apiGroup: compliance.openshift.io/v1alpha1
    settingsRef:
      name: default # (3)
      kind: ScanSetting
      apiGroup: compliance.openshift.io/v1alpha1

| 1 | use the profile ocp4-cis-node |

| 2 | use the profile ocp4-cis |

| 3 | reference to the default ScanSetting |
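When the same binding is needed for a different set of profiles, the manifest can also be generated. The following is a minimal Python sketch, not part of the operator: the helper name `scan_setting_binding` is made up for illustration, and adding `metadata.namespace` is an assumption (the object above relies on the namespace given at apply time). It prints JSON, which `oc apply -f -` accepts just like YAML.

```python
import json

API_VERSION = "compliance.openshift.io/v1alpha1"


def scan_setting_binding(name, profiles, scan_setting="default",
                         namespace="openshift-compliance"):
    """Build a ScanSettingBinding manifest as a plain dict (hypothetical helper)."""
    return {
        "apiVersion": API_VERSION,
        "kind": "ScanSettingBinding",
        # Assumption: the namespace is set explicitly instead of via `oc -n`.
        "metadata": {"name": name, "namespace": namespace},
        "profiles": [
            {"name": profile, "kind": "Profile", "apiGroup": API_VERSION}
            for profile in profiles
        ],
        "settingsRef": {
            "name": scan_setting,
            "kind": "ScanSetting",
            "apiGroup": API_VERSION,
        },
    }


manifest = scan_setting_binding("cis-compliance", ["ocp4-cis-node", "ocp4-cis"])
print(json.dumps(manifest, indent=2))
```

Saved as, say, `binding.py`, the output could be applied with `python3 binding.py | oc apply -f -`.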

As soon as the object is created, the cluster scan starts. The objects ComplianceSuite and ComplianceScan are created automatically and will eventually reach the phase "DONE" when the scan is completed.

The following command shows the results of the scans:

    oc get compliancescan -n openshift-compliance

    NAME PHASE RESULT
    ocp4-cis DONE NON-COMPLIANT
    ocp4-cis-node-master DONE NON-COMPLIANT
    ocp4-cis-node-worker DONE INCONSISTENT

Three different checks have been performed: one overall cluster check and two separate checks for the master and worker nodes.

Because we used the default ScanSetting, the next check will run at 1 a.m.
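For scripting, the same overview can be derived from the JSON output rather than the table. The sketch below is only an illustration: it assumes the list was produced with `oc get compliancescan -n openshift-compliance -o json` and that phase and result are found under `status.phase` and `status.result`; the function names and the sample data are hand-written and not real cluster output.

```python
import json


def summarize_scans(scan_list_json):
    """Map each scan name to a (phase, result) tuple."""
    summary = {}
    for item in json.loads(scan_list_json).get("items", []):
        # Assumption: PHASE and RESULT correspond to status.phase / status.result.
        status = item.get("status", {})
        summary[item["metadata"]["name"]] = (
            status.get("phase", "UNKNOWN"),
            status.get("result", "UNKNOWN"),
        )
    return summary


def needs_attention(summary):
    """Names of finished scans whose result is anything other than COMPLIANT."""
    return sorted(
        name for name, (phase, result) in summary.items()
        if phase == "DONE" and result != "COMPLIANT"
    )


# Hand-written sample mirroring the table above.
sample = json.dumps({"items": [
    {"metadata": {"name": "ocp4-cis"},
     "status": {"phase": "DONE", "result": "NON-COMPLIANT"}},
    {"metadata": {"name": "ocp4-cis-node-master"},
     "status": {"phase": "DONE", "result": "NON-COMPLIANT"}},
    {"metadata": {"name": "ocp4-cis-node-worker"},
     "status": {"phase": "DONE", "result": "INCONSISTENT"}},
]})

for name in needs_attention(summarize_scans(sample)):
    print(name)
```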

Profiles

The operator comes with a set of standard profiles which represent different compliance benchmarks.

To view available profiles:

    oc get profiles.compliance -n openshift-compliance

    NAME AGE
    ocp4-cis 28m
    ocp4-cis-node 28m
    ocp4-e8 28m
    ocp4-moderate 28m
    rhcos4-e8 28m
    rhcos4-moderate 28m

Each profile contains a description which explains its intention and a list of rules which are used in this profile.

For example, the profile 'ocp4-cis-node' used above contains:

    oc get profiles.compliance -n openshift-compliance -oyaml ocp4-cis-node

    # Output
    description: This profile defines a baseline that aligns to the Center for Internet Security® Red
      Hat OpenShift Container Platform 4 Benchmark™, V0.3, currently unreleased. This profile includes
      Center for Internet Security® Red Hat OpenShift Container Platform 4 CIS Benchmarks™ content.
      Note that this part of the profile is meant to run on the Operating System that Red Hat
      OpenShift Container Platform 4 runs on top of. This profile is applicable to OpenShift versions
      4.6 and greater.
    [...]
      name: ocp4-cis-node
      namespace: openshift-compliance
    [...]
    rules:
    - ocp4-etcd-unique-ca
    - ocp4-file-groupowner-cni-conf
    - ocp4-file-groupowner-controller-manager-kubeconfig
    - ocp4-file-groupowner-etcd-data-dir
    - ocp4-file-groupowner-etcd-data-files
    - ocp4-file-groupowner-etcd-member
    - ocp4-file-groupowner-etcd-pki-cert-files
    - ocp4-file-groupowner-ip-allocations
    [...]

Like the profiles, the individual rules can be inspected:

    oc get rules.compliance -n openshift-compliance ocp4-file-groupowner-etcd-member \
      -o jsonpath='{"Title: "}{.title}{"\nDescription: \n"}{.description}'

    # Output
    Title: Verify Group Who Owns The etcd Member Pod Specification File
    Description:
    To properly set the group owner of /etc/kubernetes/static-pod-resources/etcd-pod-*/etcd-pod.yaml ,
    run the command:
    $ sudo chgrp root /etc/kubernetes/static-pod-resources/etcd-pod-*/etcd-pod.yaml

Profile Customization

Sometimes it is required to modify (tailor) a profile to fit specific needs. With the TailoredProfile object it is possible to enable or disable rules.

In this blog, I just want to share a quick example from the official documentation: https://docs.openshift.com/container-platform/4.7/security/compliance_operator/compliance-operator-tailor.html

The following TailoredProfile disables two rules and sets a value for a variable:

    apiVersion: compliance.openshift.io/v1alpha1
    kind: TailoredProfile
    metadata:
      name: nist-moderate-modified
    spec:
      extends: rhcos4-moderate
      title: My modified NIST moderate profile
      disableRules:
        - name: rhcos4-file-permissions-node-config
          rationale: This breaks X application.
        - name: rhcos4-account-disable-post-pw-expiration
          rationale: No need to check this as it comes from the IdP
      setValues:
        - name: rhcos4-var-selinux-state
          rationale: Organizational requirements
          value: permissive

Working with scan results

Once a scan has finished, you probably want to see its status.

As you saw above, the cluster failed to be compliant.

    oc get compliancescan -n openshift-compliance

    NAME PHASE RESULT
    ocp4-cis DONE NON-COMPLIANT
    ocp4-cis-node-master DONE NON-COMPLIANT
    ocp4-cis-node-worker DONE INCONSISTENT

Retrieving results via oc command

List all results which can be remediated automatically:

    oc get compliancecheckresults -l 'compliance.openshift.io/check-status=FAIL,compliance.openshift.io/automated-remediation' -n openshift-compliance

    NAME STATUS SEVERITY
    ocp4-cis-api-server-encryption-provider-cipher FAIL medium
    ocp4-cis-api-server-encryption-provider-config FAIL medium

| Further information about remediation can be found at: Compliance Operator Remediation |
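The split that the label selector performs on the server can also be done client-side on the JSON output, which is handy when both groups are needed in one pass. This is a minimal Python sketch, assuming the list was saved with `oc get compliancecheckresults -n openshift-compliance -o json`. Only the two label keys come from the commands in this article; the function name and the sample data (including the `example-passing-check` entry) are made up for illustration.

```python
import json

STATUS_LABEL = "compliance.openshift.io/check-status"
REMEDIATION_LABEL = "compliance.openshift.io/automated-remediation"


def split_failed_checks(results_json):
    """Return (auto-remediable, manual) name lists of all failed checks."""
    automatic, manual = [], []
    for item in json.loads(results_json).get("items", []):
        labels = item["metadata"].get("labels", {})
        if labels.get(STATUS_LABEL) != "FAIL":
            continue  # passed or otherwise non-failing checks are ignored
        # The mere presence of the label marks an available remediation,
        # which is what the bare key in the label selector tests for.
        target = automatic if REMEDIATION_LABEL in labels else manual
        target.append(item["metadata"]["name"])
    return sorted(automatic), sorted(manual)


# Hand-written sample, not real cluster output.
sample = json.dumps({"items": [
    {"metadata": {"name": "ocp4-cis-api-server-encryption-provider-cipher",
                  "labels": {STATUS_LABEL: "FAIL", REMEDIATION_LABEL: ""}}},
    {"metadata": {"name": "ocp4-cis-audit-log-forwarding-enabled",
                  "labels": {STATUS_LABEL: "FAIL"}}},
    {"metadata": {"name": "example-passing-check",
                  "labels": {STATUS_LABEL: "PASS"}}},
]})

automatic, manual = split_failed_checks(sample)
print("automatic:", automatic)
print("manual:", manual)
```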

List all results which cannot be remediated automatically and must be fixed manually instead:

    oc get compliancecheckresults -l 'compliance.openshift.io/check-status=FAIL,!compliance.openshift.io/automated-remediation' -n openshift-compliance

    NAME STATUS SEVERITY
    ocp4-cis-audit-log-forwarding-enabled FAIL medium
    ocp4-cis-file-permissions-proxy-kubeconfig FAIL medium
    ocp4-cis-node-master-file-groupowner-ip-allocations FAIL medium
    ocp4-cis-node-master-file-groupowner-openshift-sdn-cniserver-config FAIL medium
    ocp4-cis-node-master-file-owner-ip-allocations FAIL medium
    ocp4-cis-node-master-file-owner-openshift-sdn-cniserver-config FAIL medium
    ocp4-cis-node-master-kubelet-configure-event-creation FAIL medium
    ocp4-cis-node-master-kubelet-configure-tls-cipher-suites FAIL medium
    ocp4-cis-node-master-kubelet-enable-protect-kernel-defaults FAIL medium
    ocp4-cis-node-master-kubelet-eviction-thresholds-set-hard-imagefs-available FAIL medium
    ocp4-cis-node-master-kubelet-eviction-thresholds-set-hard-imagefs-inodesfree FAIL medium
    ocp4-cis-node-master-kubelet-eviction-thresholds-set-hard-memory-available FAIL medium
    ocp4-cis-node-master-kubelet-eviction-thresholds-set-hard-nodefs-available FAIL medium
    ocp4-cis-node-master-kubelet-eviction-thresholds-set-hard-nodefs-inodesfree FAIL medium
    ocp4-cis-node-master-kubelet-eviction-thresholds-set-soft-imagefs-available FAIL medium
    ocp4-cis-node-master-kubelet-eviction-thresholds-set-soft-imagefs-inodesfree FAIL medium
    ocp4-cis-node-master-kubelet-eviction-thresholds-set-soft-memory-available FAIL medium
    ocp4-cis-node-master-kubelet-eviction-thresholds-set-soft-nodefs-available FAIL medium
    ocp4-cis-node-master-kubelet-eviction-thresholds-set-soft-nodefs-inodesfree FAIL medium
    ocp4-cis-node-worker-file-groupowner-ip-allocations FAIL medium
    ocp4-cis-node-worker-file-groupowner-openshift-sdn-cniserver-config FAIL medium
    ocp4-cis-node-worker-file-owner-ip-allocations FAIL medium
    ocp4-cis-node-worker-file-owner-openshift-sdn-cniserver-config FAIL medium
    ocp4-cis-node-worker-kubelet-configure-event-creation FAIL medium
    ocp4-cis-node-worker-kubelet-configure-tls-cipher-suites FAIL medium
    ocp4-cis-node-worker-kubelet-enable-protect-kernel-defaults FAIL medium
    ocp4-cis-node-worker-kubelet-eviction-thresholds-set-hard-imagefs-available FAIL medium
    ocp4-cis-node-worker-kubelet-eviction-thresholds-set-hard-imagefs-inodesfree FAIL medium
    ocp4-cis-node-worker-kubelet-eviction-thresholds-set-hard-memory-available FAIL medium
    ocp4-cis-node-worker-kubelet-eviction-thresholds-set-hard-nodefs-available FAIL medium
    ocp4-cis-node-worker-kubelet-eviction-thresholds-set-hard-nodefs-inodesfree FAIL medium
    ocp4-cis-node-worker-kubelet-eviction-thresholds-set-soft-imagefs-available FAIL medium
    ocp4-cis-node-worker-kubelet-eviction-thresholds-set-soft-imagefs-inodesfree FAIL medium
    ocp4-cis-node-worker-kubelet-eviction-thresholds-set-soft-memory-available FAIL medium
    ocp4-cis-node-worker-kubelet-eviction-thresholds-set-soft-nodefs-available FAIL medium
    ocp4-cis-node-worker-kubelet-eviction-thresholds-set-soft-nodefs-inodesfree FAIL medium

Retrieving RAW results

Let’s first retrieve the raw results of the scan. For each ComplianceScan a persistent volume claim (PVC) is created to store the results. We can use a Pod that mounts the volumes to download the scan results.

The following PVCs have been created in our example:

    oc get pvc -n openshift-compliance

    NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
    ocp4-cis Bound pvc-cc026ae3-2f42-4e19-bc55-016c6dd31d22 1Gi RWO managed-nfs-storage 4h17m
    ocp4-cis-node-master Bound pvc-3bd47c5e-2008-4759-9d53-ba41b568688d 1Gi RWO managed-nfs-storage 4h17m
    ocp4-cis-node-worker Bound pvc-77200e5f-0f15-410c-a4ee-f2fb3e316f84 1Gi RWO managed-nfs-storage 4h17m

Now we can create a Pod which mounts all PVCs at once:

    apiVersion: "v1"
    kind: Pod
    metadata:
      name: pv-extract
      namespace: openshift-compliance
    spec:
      containers:
        - name: pv-extract-pod
          image: registry.access.redhat.com/ubi8/ubi
          command: ["sleep", "3000"]
          volumeMounts: # (1)
            - mountPath: "/workers-scan-results"
              name: workers-scan-vol
            - mountPath: "/masters-scan-results"
              name: masters-scan-vol
            - mountPath: "/ocp4-scan-results"
              name: ocp4-scan-vol
      volumes: # (2)
        - name: workers-scan-vol
          persistentVolumeClaim:
            claimName: ocp4-cis-node-worker
        - name: masters-scan-vol
          persistentVolumeClaim:
            claimName: ocp4-cis-node-master
        - name: ocp4-scan-vol
          persistentVolumeClaim:
            claimName: ocp4-cis

| 1 | mount paths |

| 2 | volume claims to mount |

This creates a Pod with the PVCs mounted inside:

    sh-4.4# ls -la | grep scan
    drwxrwxrwx. 3 root root 4096 Jul 20 05:20 masters-scan-results
    drwxrwxrwx. 3 root root 4096 Jul 20 05:20 ocp4-scan-results
    drwxrwxrwx. 3 root root 4096 Jul 20 05:20 workers-scan-results

We can download the result files to our local machine for further auditing. To do so, we create the folder scan-results and copy everything into it:

    mkdir scan-results; cd scan-results
    oc -n openshift-compliance cp pv-extract:ocp4-scan-results ocp4-scan-results/.
    oc -n openshift-compliance cp pv-extract:workers-scan-results workers-scan-results/.
    oc -n openshift-compliance cp pv-extract:masters-scan-results masters-scan-results/.

This downloads several bzip2 archives containing the respective scan results.

Once done, you can delete the "download pod" using: oc delete pod pv-extract -n openshift-compliance

Work with RAW results

The section above described how to download the bzip2 files, but what can you do with them? First, you can import them into a tool that is able to read OpenSCAP reports. Second, you can use the oscap command to create HTML output.
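For a quick look at the pass/fail counts without any extra tooling, a few lines of Python are enough. The sketch below is not an OpenSCAP API: it assumes each downloaded archive is bzip2-compressed XML in which every XCCDF `rule-result` element has a `result` child, and it ignores XML namespaces so it does not depend on a specific XCCDF version. The function names and the sample document are made up for illustration.

```python
import bz2
from collections import Counter
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip an XML namespace: '{ns}rule-result' -> 'rule-result'."""
    return tag.rsplit("}", 1)[-1]


def count_results(xml_bytes):
    """Count the <result> values of all <rule-result> elements."""
    counts = Counter()
    for element in ET.fromstring(xml_bytes).iter():
        if local_name(element.tag) != "rule-result":
            continue
        for child in element:
            if local_name(child.tag) == "result" and child.text:
                counts[child.text.strip()] += 1
    return counts


def count_results_in_archive(path):
    """Decompress one downloaded bzip2 archive and count its rule results."""
    with bz2.open(path, "rb") as handle:
        return count_results(handle.read())


# Synthetic stand-in for a scan result; real files are far larger.
sample = b"""<Benchmark xmlns="http://checklists.nist.gov/xccdf/1.2">
  <TestResult>
    <rule-result idref="rule-a"><result>pass</result></rule-result>
    <rule-result idref="rule-b"><result>fail</result></rule-result>
    <rule-result idref="rule-c"><result>fail</result></rule-result>
  </TestResult>
</Benchmark>"""

print(dict(count_results(sample)))
```

On a real download, `count_results_in_archive` would be called with the path of one of the `.xml.bzip2` files.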

We have downloaded the following files:

    ./ocp4-scan-results/0/ocp4-cis-api-checks-pod.xml.bzip2
    ./masters-scan-results/0/ocp4-cis-node-master-master-0-pod.xml.bzip2
    ./masters-scan-results/0/ocp4-cis-node-master-master-2-pod.xml.bzip2
    ./masters-scan-results/0/ocp4-cis-node-master-master-1-pod.xml.bzip2
    ./workers-scan-results/0/ocp4-cis-node-worker-compute-0-pod.xml.bzip2
    ./workers-scan-results/0/ocp4-cis-node-worker-compute-1-pod.xml.bzip2
    ./workers-scan-results/0/ocp4-cis-node-worker-compute-3-pod.xml.bzip2
    ./workers-scan-results/0/ocp4-cis-node-worker-compute-2-pod.xml.bzip2

To create the HTML output (be sure that OpenSCAP is installed on your host):

mkdir html

oscap xccdf generate report ocp4-scan-results/0/ocp4-cis-api-checks-pod.xml.bzip2 >> html/ocp4-cis-api-checks.html

oscap xccdf generate report masters-scan-results/0/ocp4-cis-node-master-master-0-pod.xml.bzip2 >> html/ocp4-cis-node-master-master-0.html

oscap xccdf generate report masters-scan-results/0/ocp4-cis-node-master-master-1-pod.xml.bzip2 >> html/ocp4-cis-node-master-master-1.html

oscap xccdf generate report masters-scan-results/0/ocp4-cis-node-master-master-2-pod.xml.bzip2 >> html/ocp4-cis-node-master-master-2.html

oscap xccdf generate report workers-scan-results/0/ocp4-cis-node-worker-compute-0-pod.xml.bzip2 >> html/ocp4-cis-node-worker-compute-0.html

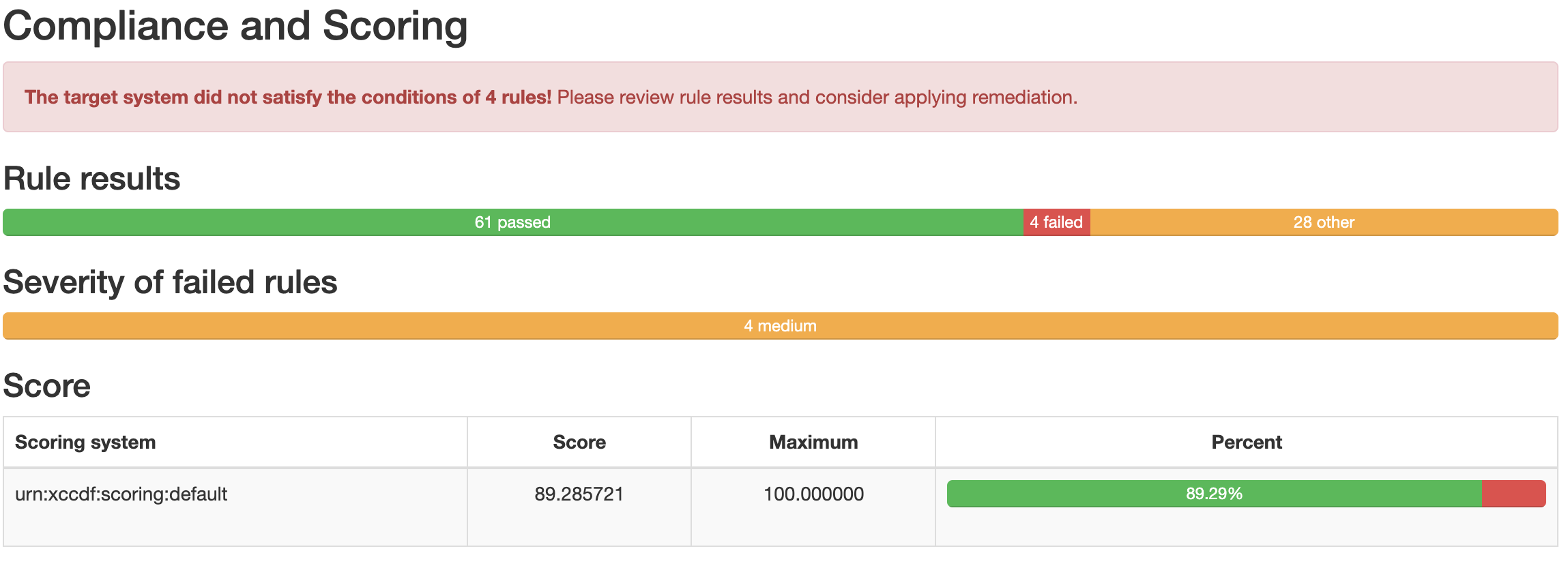

...The resulted html files are too big to be show here, but some snippets should give an overview:

To view the html output as an example I have linked the html files:

Overall Scoring of the result:

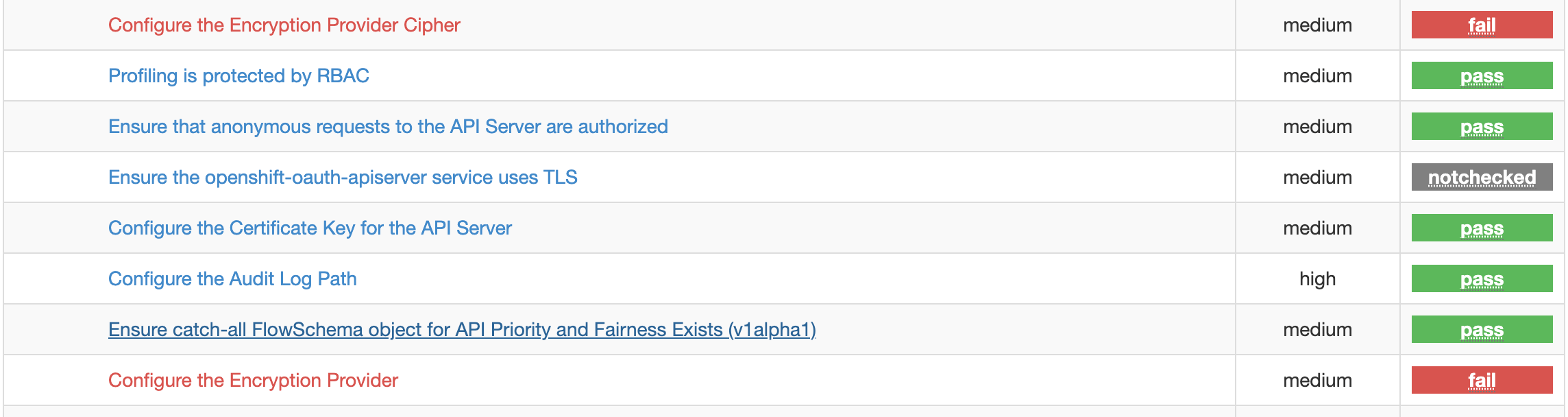

A list of passed or failed checks:

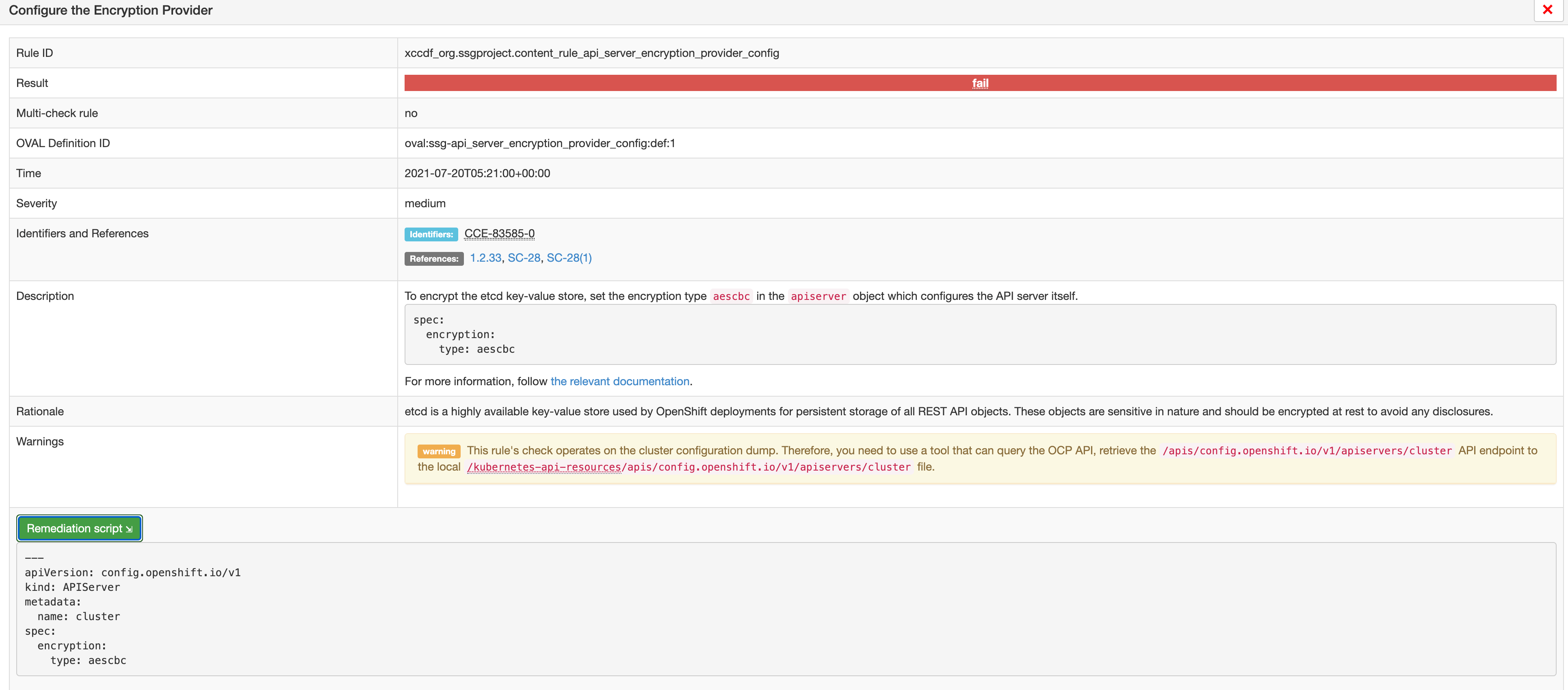

Scan details with a link to the CIS Benchmark section and further explanations on how to fix the issue:

Performing a rescan

If it is necessary to run a rescan, the ComplianceScan object is simply annotated with:

    oc -n openshift-compliance annotate compliancescans/<scan_name> compliance.openshift.io/rescan=

| If default-auto-apply is enabled, remediation which changes MachineConfigs will trigger a reboot of the affected nodes. |
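To rescan several scans in one go, the annotate command can be generated per scan. The following Python sketch only builds and prints the commands; the helper name `rescan_command` is hypothetical, the scan names are the three from this article, and passing the namespace with `-n` is an assumption that matches the other commands used here.

```python
import shlex


def rescan_command(scan_name, namespace="openshift-compliance"):
    """Build the argv list that annotates one ComplianceScan for a rescan."""
    return [
        "oc", "-n", namespace, "annotate",
        f"compliancescans/{scan_name}",
        "compliance.openshift.io/rescan=",
    ]


for scan in ["ocp4-cis", "ocp4-cis-node-master", "ocp4-cis-node-worker"]:
    # Printed for review; nothing is executed against the cluster here.
    print(shlex.join(rescan_command(scan)))
```

To actually run a command instead of printing it, the list can be handed to `subprocess.run(rescan_command(scan), check=True)`.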

Copyright © 2020 - 2024 Toni Schmidbauer & Thomas Jungbauer

Thomas Jungbauer
