Articles by Thomas Jungbauer

The Guide to OpenBao - Standalone Installation - Part 2

In the previous article, we introduced OpenBao and its core concepts. Now it is time to get our hands dirty with a standalone installation. This approach is useful for testing, development environments, edge deployments, or scenarios where Kubernetes is not available.

[Ep.15] OpenShift GitOps - Argo CD Agent

OpenShift GitOps based on Argo CD is a powerful tool to manage the infrastructure and applications on an OpenShift cluster. Initially, there were two ways of deployment: centralized and decentralized (or distributed). Both methods had their own advantages and disadvantages, and the choice was mainly between scalability and centralization. With OpenShift GitOps v1.19, the Argo CD Agent finally became generally available. The agent tries to resolve this trade-off by bringing the best of both worlds together. In this rather long article, I will show you how to install and configure the Argo CD Agent with OpenShift GitOps using a hub-and-spoke architecture.

The Hitchhiker's Guide to Observability - Here Comes Grafana - Part 7

So far we have been using the integrated tracing UI in OpenShift; now it is time to summon Grafana. Grafana is a visualization powerhouse that allows teams to build custom dashboards, correlate traces with logs and metrics, and gain deep insights into their applications. In this article, we’ll deploy a dedicated Grafana instance for team-a in their namespace, configure a Tempo datasource, and create a dashboard to explore distributed traces.

The Guide to OpenBao - Introduction - Part 1

I finally had some time to dig into Secret Management. For my demo environments, SealedSecrets is usually enough to quickly test something. But if you want to deploy a real application with Secret Management, you need to think of a more permanent solution.

This article is the first of a series of articles about OpenBao, a HashiCorp Vault fork. Today, we will explore what OpenBao is, why it was created, and when you should consider using it for your secret management needs. If you are familiar with HashiCorp Vault, you will find many similarities, but also some important differences that we will discuss.

The Hitchhiker's Guide to Observability Introduction - Part 1

With this article, I would like to summarize my setup and, above all, document it so that I can remember it later. This is Part 1 of a series that I split up so that each article is easier to read and understand and does not get too long. Initially, there will be 6 parts, but I will add more as needed.

The Hitchhiker's Guide to Observability - Grafana Tempo - Part 2

After covering the fundamentals and architecture in Part 1, it’s time to get our hands dirty! This article walks through the complete implementation of a distributed tracing infrastructure on OpenShift.

We’ll deploy and configure the Tempo Operator and a multi-tenant TempoStack instance. For S3 storage we will use the integrated OpenShift Data Foundation. However, you can use whatever S3-compatible storage you have available.

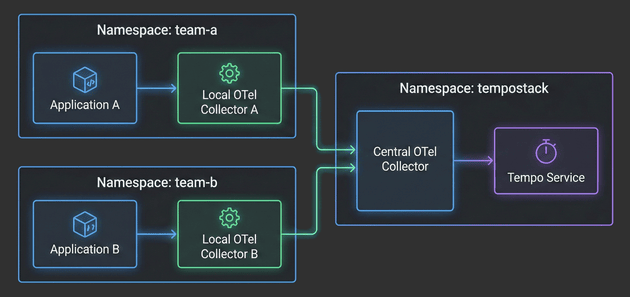

The Hitchhiker's Guide to Observability - Central Collector - Part 3

With the architecture defined in Part 1 and TempoStack deployed in Part 2, it’s time to tackle the heart of our distributed tracing system: the Central OpenTelemetry Collector. This is the critical component that sits between your application namespaces and TempoStack, orchestrating trace flow, metadata enrichment, and tenant routing.

The Hitchhiker's Guide to Observability - Example Applications - Part 4

With the architecture defined, TempoStack deployed, and the Central Collector configured, we’re now ready to complete the distributed tracing pipeline. It’s time to deploy real applications and see traces flowing through the entire system!

In this fourth installment, we’ll focus on the application layer - deploying Local OpenTelemetry Collectors in team namespaces and configuring example applications to generate traces. You’ll see how applications automatically get enriched with Kubernetes metadata, how namespace-based routing directs traces to the correct TempoStack tenants, and how the entire two-tier architecture comes together.

The Hitchhiker's Guide to Observability - Understanding Traces - Part 5

With the architecture established, TempoStack deployed, the Central Collector configured, and applications generating traces, it’s time to take a step back and understand what we’re actually building. Before you deploy more applications and start troubleshooting performance issues, you need to understand how to read and interpret distributed traces.

Let’s decode the matrix of distributed tracing!

The Hitchhiker's Guide to Observability - Adding A New Tenant - Part 6

When we built our distributed tracing infrastructure, we created two tenants as an example. In this article, I will show you how to add a new tenant and which changes must be made in the TempoStack and the OpenTelemetry Collector.

This article is mainly intended as a quick reference for the changes required when adding new tenants.

Copyright © 2020 - 2026 Toni Schmidbauer & Thomas Jungbauer

![image from [Ep.15] OpenShift GitOps - Argo CD Agent](https://blog.stderr.at/gitopscollection/images/agent/Logo-ArgoCDAgent.png)